Reading the sample data from Azure Data Lake Storage

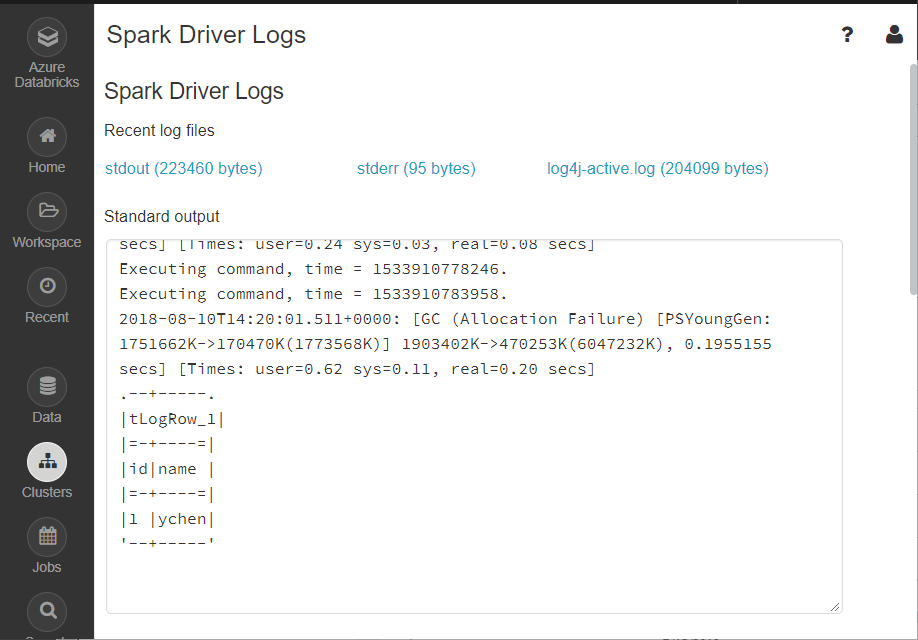

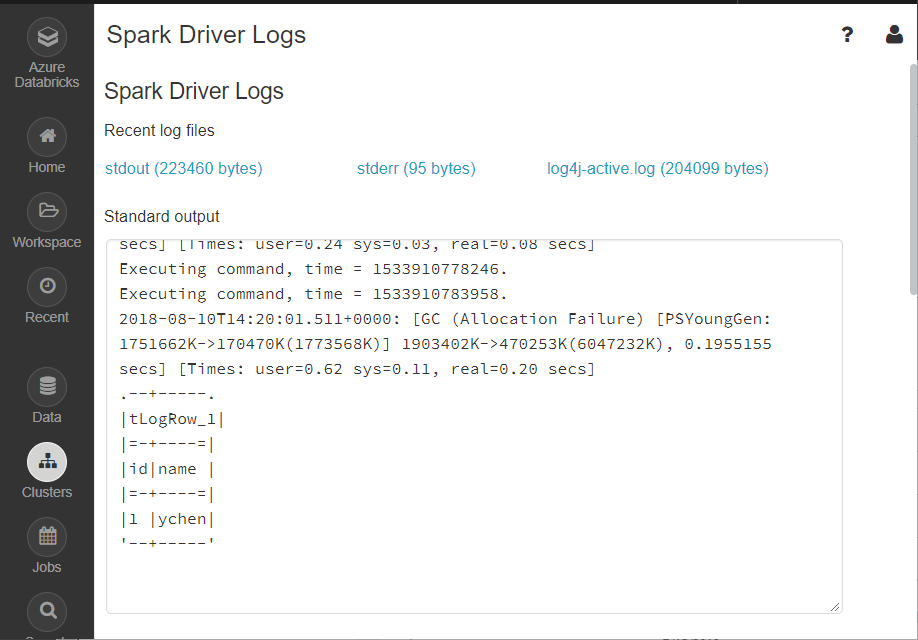

Procedure

Results
