Recuperating valid and invalid rows in a column analysis

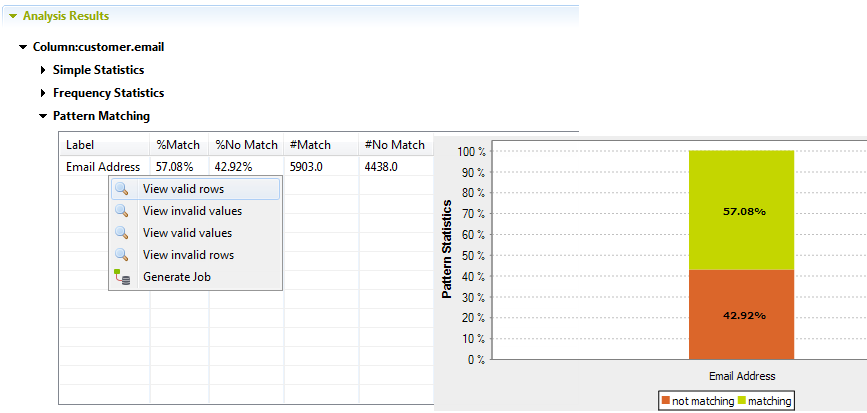

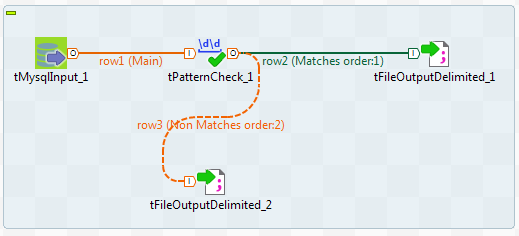

You can generate a ready-to-use Job from the results of a column analysis. This Job recuperates the valid rows, the invalid rows, or both, and writes them to output files or databases.
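As a rough illustration of what such a Job does conceptually, the following Python sketch partitions rows by a validity rule and writes each group to its own output. It is not the code the Job generator produces. The `split_rows` and `write_rows` helpers, the email-like pattern, and the sample data are all hypothetical, chosen only to show the valid/invalid split.

```python
import csv
import io
import re

# Hypothetical validity rule: a real column analysis would apply its own
# pattern or indicator. This email-like regex is only an assumption.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def split_rows(rows, column, pattern=EMAIL_PATTERN):
    """Partition rows into (valid, invalid) by matching one column against a pattern."""
    valid, invalid = [], []
    for row in rows:
        value = row.get(column) or ""
        (valid if pattern.match(value) else invalid).append(row)
    return valid, invalid


def write_rows(rows, fieldnames, stream):
    """Write rows as CSV with a header line to any text stream (file or buffer)."""
    writer = csv.DictWriter(stream, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)


# Sample data standing in for the analyzed column's table (illustrative only).
rows = [
    {"id": "1", "email": "ann@example.com"},
    {"id": "2", "email": "not-an-email"},
    {"id": "3", "email": ""},
]

valid_rows, invalid_rows = split_rows(rows, "email")

# In-memory buffers stand in for the output files or database tables.
valid_out, invalid_out = io.StringIO(), io.StringIO()
write_rows(valid_rows, ["id", "email"], valid_out)
write_rows(invalid_rows, ["id", "email"], invalid_out)
```

Keeping the two groups in separate outputs mirrors the choice described above: you can keep only the valid rows, only the invalid rows, or both.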

Before you begin

Procedure
