Write data to HDFS

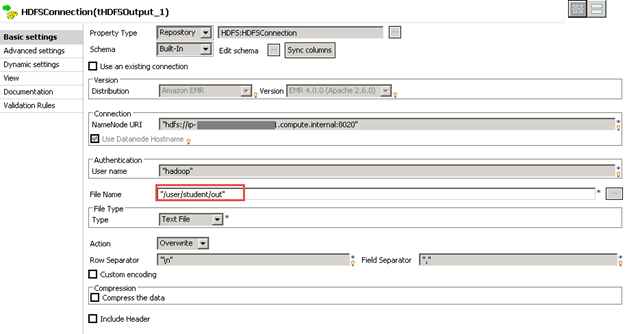

This example uses a Standard Job to write data to HDFS.

Procedure
