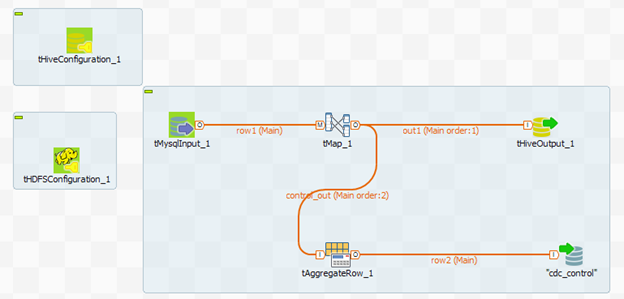

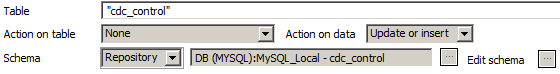

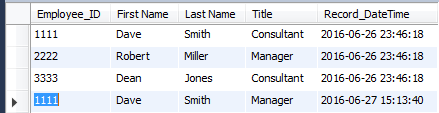

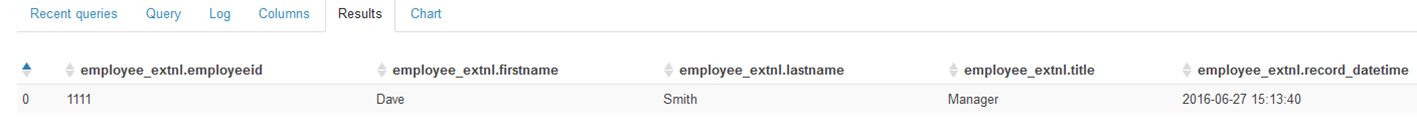

Step 2: Loading changes from the source database table into the Hive external table

This step reads only the changes from the source database table and loads them into the Hive external table employee_extnl.
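To illustrate what "only the changes" means in practice, here is a minimal sketch of a timestamp-based incremental extract in Python. It selects rows whose change-tracking column is later than the last recorded value. It then writes them as a new comma-delimited file into a directory that stands in for the external table's storage location. Everything here is an assumption for illustration, not the procedure's actual tooling:

- SQLite stands in for the source database.
- The `employee` table, its `modified_date` column, the `load_changes` helper, and the `part-NNNNN.csv` file naming are all hypothetical.

```python
import csv
import os
import sqlite3
import tempfile


def load_changes(conn, table, check_column, last_value, out_dir):
    """Hypothetical helper: write rows changed after last_value to a new CSV file in out_dir.

    Returns (rows_written, new_last_value). table and check_column are
    interpolated into the SQL, so they must come from trusted configuration.
    """
    cur = conn.execute(
        f"SELECT * FROM {table} WHERE {check_column} > ? ORDER BY {check_column}",
        (last_value,),
    )
    columns = [d[0] for d in cur.description]
    rows = cur.fetchall()
    if not rows:
        return 0, last_value  # nothing changed; keep the old watermark

    os.makedirs(out_dir, exist_ok=True)
    # One new file per run, so files written by earlier runs are left untouched.
    part = len(os.listdir(out_dir))
    path = os.path.join(out_dir, f"part-{part:05d}.csv")
    with open(path, "w", newline="") as f:
        # No header row, assuming the external table does not skip header lines.
        csv.writer(f).writerows(rows)

    # Rows are ordered by check_column, so the last row holds the new watermark.
    new_last = rows[-1][columns.index(check_column)]
    return len(rows), new_last


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE employee (id INTEGER, name TEXT, modified_date TEXT)")
    conn.executemany(
        "INSERT INTO employee VALUES (?, ?, ?)",
        [(1, "Alice", "2024-01-01 09:00:00"), (2, "Bob", "2024-01-02 09:00:00")],
    )
    out_dir = tempfile.mkdtemp()  # stands in for the external table's location
    count, watermark = load_changes(
        conn, "employee", "modified_date", "2024-01-01 12:00:00", out_dir
    )
    print(count, watermark)  # 1 2024-01-02 09:00:00
```

Each run stays limited to the delta only if you persist the returned watermark and pass it to the next run. Sqoop's `--incremental lastmodified` mode, with `--check-column` and `--last-value`, follows the same pattern.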

Procedure
