Creating a new Hadoop configuration context outside Talend Studio (optional)

You can contextualize the Hadoop connection for a Job without using Talend Studio.

If Talend Studio is not available and you need to deploy a Job to a Hadoop environment other than the ones already defined for this Job, you can add a new Hadoop connection context manually.

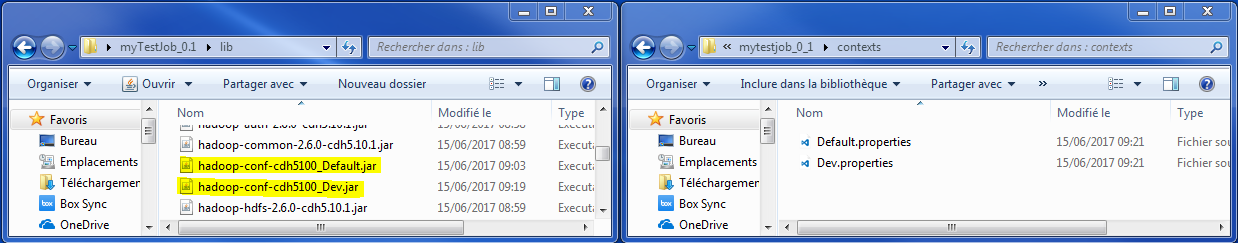

If a Job uses a contextualized Hadoop connection with two contexts, for example Default and Dev, the lib folder of the artifact built from Talend Studio (the Job zip) contains two special jars, one for each of these Hadoop environments. The names of these jars follow the pattern "hadoop-conf-[name_of_the_metadata_in_the_repository]_[name_of_the_context].jar".
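To check which contexts a built Job already ships, you can list the jars in its lib folder that follow this pattern. The Python sketch below is illustrative only: the function names and the metadata name `myHadoopCluster` are hypothetical, and it assumes that a context name contains no underscore, so each file name is split on its last underscore.

```python
from pathlib import Path
from typing import Dict, List, Optional, Tuple

# Documented naming pattern for the per-context configuration jars:
# hadoop-conf-[name_of_the_metadata_in_the_repository]_[name_of_the_context].jar
PREFIX = "hadoop-conf-"
SUFFIX = ".jar"


def hadoop_conf_jar_name(metadata_name: str, context_name: str) -> str:
    """Build the jar file name for one metadata/context pair."""
    return f"{PREFIX}{metadata_name}_{context_name}{SUFFIX}"


def parse_hadoop_conf_jar(file_name: str) -> Optional[Tuple[str, str]]:
    """Split a jar file name into (metadata, context), or return None.

    Assumption: the context name contains no underscore, so the name is
    split on its last underscore.
    """
    if not (file_name.startswith(PREFIX) and file_name.endswith(SUFFIX)):
        return None
    stem = file_name[len(PREFIX):-len(SUFFIX)]
    metadata, sep, context = stem.rpartition("_")
    if not sep or not metadata or not context:
        return None  # the name does not follow the pattern
    return metadata, context


def list_hadoop_contexts(lib_dir: str) -> Dict[str, List[str]]:
    """Map each metadata name to the contexts that have a jar in lib_dir."""
    contexts: Dict[str, List[str]] = {}
    for jar in sorted(Path(lib_dir).glob(PREFIX + "*" + SUFFIX)):
        parsed = parse_hadoop_conf_jar(jar.name)
        if parsed is not None:
            metadata, context = parsed
            contexts.setdefault(metadata, []).append(context)
    return contexts
```

For the Default/Dev example, running `list_hadoop_contexts` on the unzipped lib folder would be expected to return one metadata entry listing both context names, and `hadoop_conf_jar_name` gives the name a jar for an additional context would need in order to match the pattern.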

The jar used at runtime is determined by the context specified in the Job's launch command, which you can find in the .bat file or the .sh file of the Job.

The following line is an example of this command, which uses the Default context:

```bat
java -Xms256M -Xmx1024M -cp .;../lib/routines.jar;../lib/antlr-runtime-3.5.2.jar;../lib/avro-1.7.6-cdh5.10.1.jar;../lib/commons-cli-1.2.jar;../lib/commons-codec-1.9.jar;../lib/commons-collections-3.2.2.jar;../lib/commons-configuration-1.6.jar;../lib/commons-lang-2.6.jar;../lib/commons-logging-1.2.jar;../lib/dom4j-1.6.1.jar;../lib/guava-12.0.1.jar;../lib/hadoop-auth-2.6.0-cdh5.10.1.jar;../lib/hadoop-common-2.6.0-cdh5.10.1.jar;../lib/hadoop-hdfs-2.6.0-cdh5.10.1.jar;../lib/htrace-core4-4.0.1-incubating.jar;../lib/httpclient-4.3.3.jar;../lib/httpcore-4.3.3.jar;../lib/jackson-core-asl-1.8.8.jar;../lib/jackson-mapper-asl-1.8.8.jar;../lib/jersey-core-1.9.jar;../lib/log4j-1.2.16.jar;../lib/log4j-1.2.17.jar;../lib/org.talend.dataquality.parser.jar;../lib/protobuf-java-2.5.0.jar;../lib/servlet-api-2.5.jar;../lib/slf4j-api-1.7.5.jar;../lib/slf4j-log4j12-1.7.5.jar;../lib/talend_file_enhanced_20070724.jar;mytestjob_0_1.jar; local_project.mytestjob_0_1.myTestJob --context=Default %*
```

In this example, switching from Default to Dev changes the Hadoop configuration that is loaded into the Job at runtime.
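Because the context is a plain `--context=` argument in the launcher, switching it outside Talend Studio amounts to a text edit of the .bat or .sh file. The Python sketch below shows one way to read and rewrite that argument and to derive the jar name that the documented pattern gives for the selected context. The function names are hypothetical, and the sketch assumes the launcher contains a single `--context=` argument whose value has no spaces.

```python
import re
from typing import Optional

# Matches the context argument, e.g. "--context=Default" (assumes no spaces in the name).
CONTEXT_ARG = re.compile(r"--context=(\S+)")


def get_context(command_line: str) -> Optional[str]:
    """Return the context named in a launcher command, or None if absent."""
    match = CONTEXT_ARG.search(command_line)
    return match.group(1) if match else None


def set_context(command_line: str, new_context: str) -> str:
    """Return the command with its first --context= argument replaced."""
    if CONTEXT_ARG.search(command_line) is None:
        raise ValueError("no --context= argument found")
    # A function replacement stops backslashes in new_context being read as escapes.
    return CONTEXT_ARG.sub(lambda _: f"--context={new_context}", command_line, count=1)


def expected_conf_jar(command_line: str, metadata_name: str) -> Optional[str]:
    """Jar name given by the documented naming pattern for the command's context."""
    context = get_context(command_line)
    if context is None:
        return None
    return f"hadoop-conf-{metadata_name}_{context}.jar"


def switch_launcher_context(script_path: str, new_context: str) -> None:
    """Rewrite the --context= argument of a .bat or .sh launcher in place."""
    # newline="" keeps the file's original line endings (for example CRLF in .bat files).
    with open(script_path, newline="") as f:
        text = f.read()
    with open(script_path, "w", newline="") as f:
        f.write(set_context(text, new_context))
```

Applied to the command above, `set_context(line, "Dev")` replaces only `--context=Default`, leaving the classpath and the trailing `%*` untouched. It is safer to run `switch_launcher_context` on a copy of the launcher first.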
