Filtering customer data based on valid and invalid semantic types

Before you begin

- You have previously created a connection to the system storing your source data.

  Here, a Test connection is used.

-

You have previously added the dataset holding your source data.

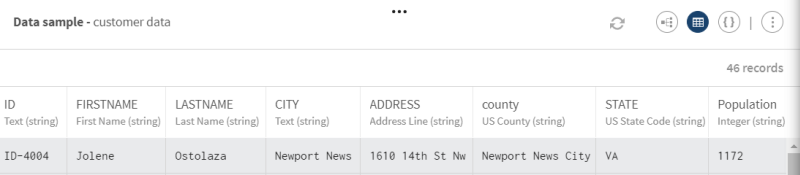

Download and extract the file: semantic_filter-customers.zip. It contains a list of customers with raw data that you can find attached to this document.

-

You also have created the connection and the related dataset that will hold the processed data.

Here the files will also be stored in two Test datasets.

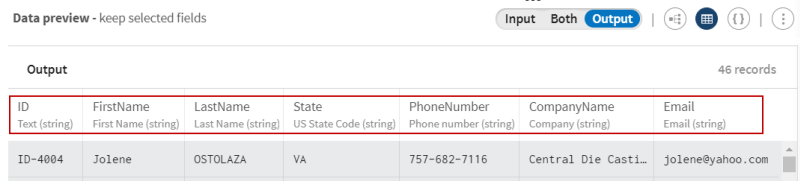

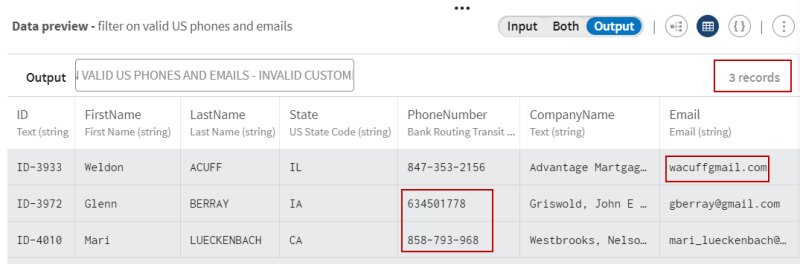

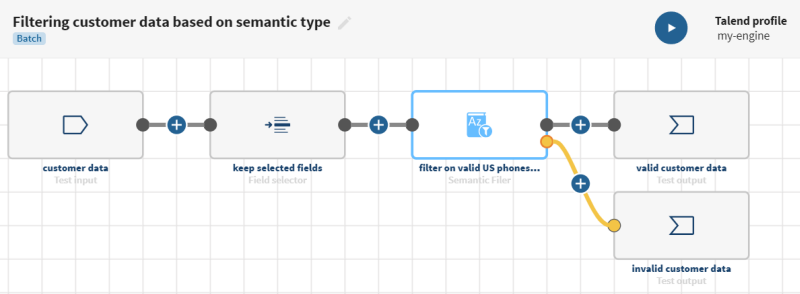

Procedure

Results

Your pipeline is executed: the data is filtered according to the semantic types you selected, and the output flows are sent to the destinations you indicated.
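Conceptually, filtering on valid and invalid semantic types routes each record into one of two output flows. The Python sketch below illustrates that idea only; it is not how the product implements semantic types. The `SEMANTIC_TYPES` validators, the `split_by_semantic_type` function, and the `email` field name are hypothetical, and the regular expressions are simplified stand-ins for real semantic type definitions.

```python
import re
from typing import Callable, Dict, List, Tuple

Record = Dict[str, str]

# Hypothetical, simplified semantic type checks used for illustration only.
# They are NOT the product's actual semantic type definitions.
SEMANTIC_TYPES: Dict[str, Callable[[str], bool]] = {
    "EMAIL": lambda v: re.fullmatch(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}", v) is not None,
    # Hyphen placed first in the character class so it is a literal, not a range.
    "US_PHONE": lambda v: re.fullmatch(r"\(?\d{3}\)?[-. ]?\d{3}[-. ]?\d{4}", v) is not None,
}


def split_by_semantic_type(
    records: List[Record], field: str, semantic_type: str
) -> Tuple[List[Record], List[Record]]:
    """Route each record to the valid or invalid flow depending on whether
    the value of `field` matches `semantic_type`."""
    is_valid = SEMANTIC_TYPES[semantic_type]
    valid: List[Record] = []
    invalid: List[Record] = []
    for record in records:
        # A missing field is treated as an empty value, which fails the check.
        value = record.get(field, "").strip()
        (valid if is_valid(value) else invalid).append(record)
    return valid, invalid


customers = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "Bob", "email": "bob-at-example"},
    {"name": "Cy", "email": ""},
]
valid, invalid = split_by_semantic_type(customers, "email", "EMAIL")
print([c["name"] for c in valid])    # ['Ada']
print([c["name"] for c in invalid])  # ['Bob', 'Cy']
```

In this sketch, the two returned lists play the role of the two output flows, each of which would be written to its own destination dataset.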

What to do next
