Remote Engine Gen2 architecture

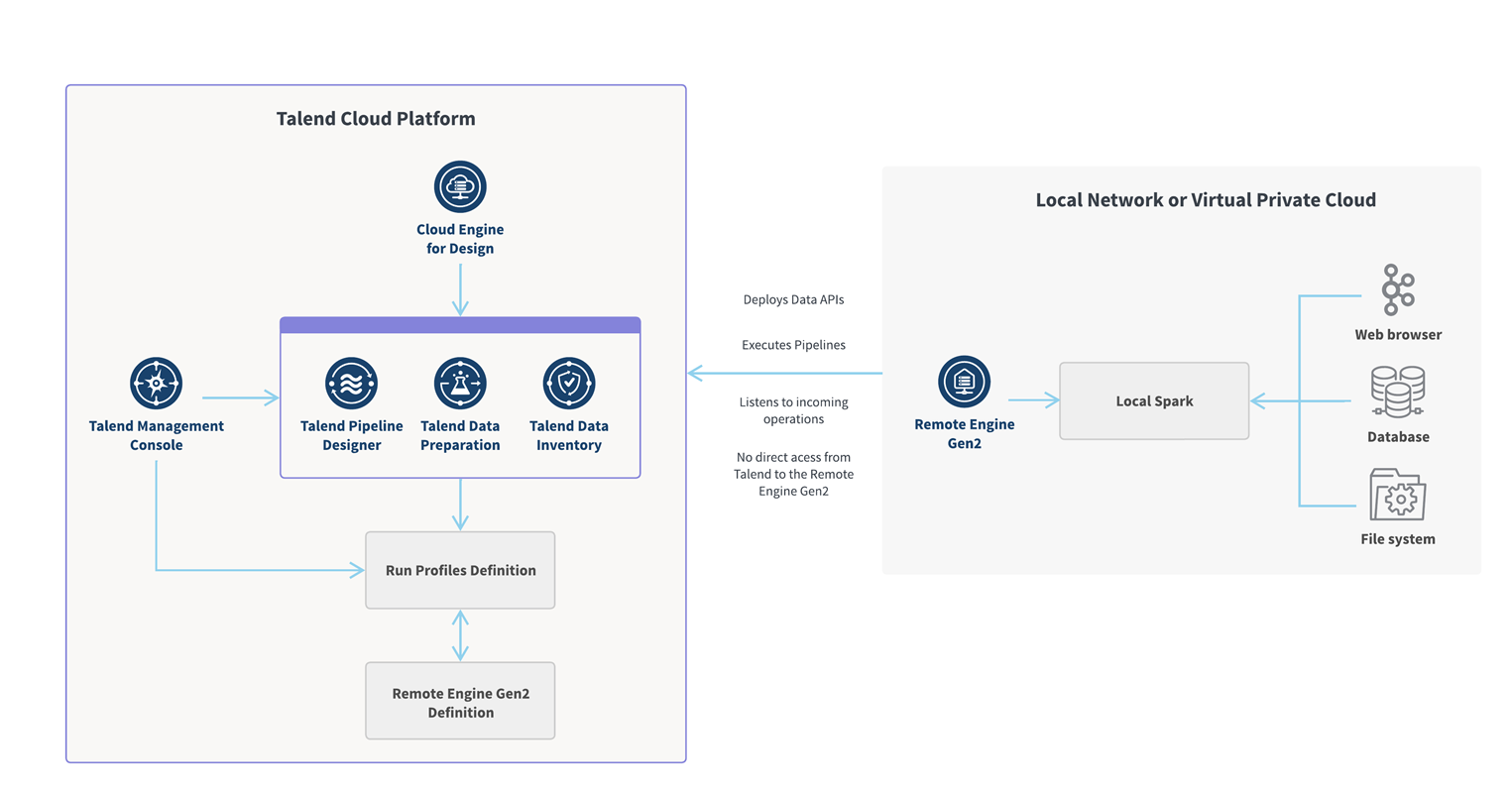

The diagram is divided into two main parts: the Talend Cloud infrastructure and the customer's local network or Virtual Private Cloud (VPC).

Cloud infrastructure

- In Talend Management Console, you can administer roles, users, projects, engines, and licenses. Talend Management Console is also where you define the Remote Engine Gen2 and its corresponding run profiles, in which you can customize the resources allocated to executions.

- The Dataset service provides the unified dataset list within Talend Cloud.

  Talend Cloud Data Inventory is the central place where you access and maintain your dataset collection.

  Talend Cloud Data Preparation and Talend Cloud Pipeline Designer are the two other applications that benefit from the common dataset inventory, and allow you to cleanse or transform your data.

- The Cloud Engine for Design and its corresponding run profile come embedded by default in Talend Management Console to help users quickly get started with the apps, but it is recommended to install the secure Remote Engine Gen2 for advanced processing of data.

  These engines are used to run artifacts, tasks, preparations, and pipelines in the cloud, as well as to create connections and fetch data samples.

Customer's Virtual Private Cloud

Your Virtual Private Cloud includes the Remote Engine Gen2 that is used to run pipelines and preparations in a secure way.

The Remote Engine Gen2 ensures secure access to your data stored on Kafka, databases, file systems, and so on, and by default executes your artifacts on a local Spark engine.
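As a conceptual summary of the two parts described above, the following Python sketch models where each engine lives and which one a run would use. It is not a Talend API: the `Location`, `Engine`, and `pick_engine` names are hypothetical, and the selection rule only mirrors the recommendation to prefer an installed Remote Engine Gen2 over the embedded Cloud Engine for Design.

```python
from dataclasses import dataclass
from enum import Enum


class Location(Enum):
    """The two parts of the architecture diagram."""
    TALEND_CLOUD = "Talend Cloud infrastructure"
    CUSTOMER_VPC = "customer's local network or VPC"


@dataclass(frozen=True)
class Engine:
    """Hypothetical description of an engine; not a Talend data structure."""
    name: str
    location: Location
    embedded_by_default: bool


# The Cloud Engine for Design comes embedded in Talend Management Console.
CLOUD_ENGINE_FOR_DESIGN = Engine(
    "Cloud Engine for Design", Location.TALEND_CLOUD, embedded_by_default=True
)

# The Remote Engine Gen2 is installed in the customer's own network or VPC.
REMOTE_ENGINE_GEN2 = Engine(
    "Remote Engine Gen2", Location.CUSTOMER_VPC, embedded_by_default=False
)


def pick_engine(remote_engine_installed: bool) -> Engine:
    """Assumed rule: use the Remote Engine Gen2 when one is installed,
    otherwise fall back to the embedded Cloud Engine for Design."""
    if remote_engine_installed:
        return REMOTE_ENGINE_GEN2
    return CLOUD_ENGINE_FOR_DESIGN


if __name__ == "__main__":
    for installed in (False, True):
        engine = pick_engine(installed)
        print(f"installed={installed}: {engine.name} ({engine.location.value})")
```

In this model, the only difference that matters for data security is the engine's location: with the Remote Engine Gen2, execution happens inside the customer's network rather than in Talend Cloud.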
