Setting reusable Hadoop properties

About this task

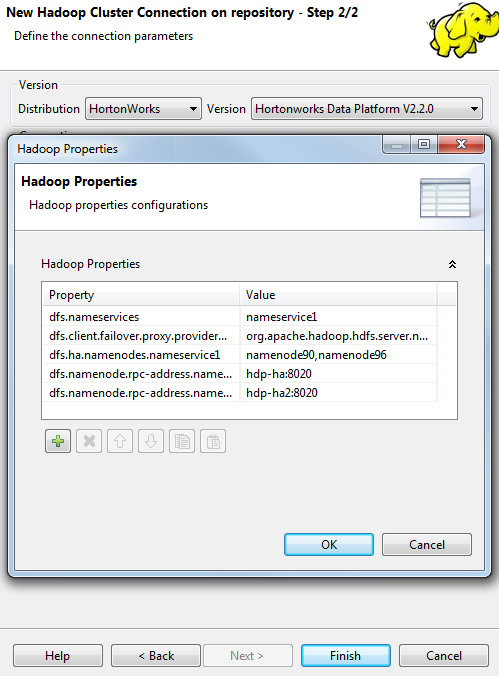

When setting up a Hadoop connection, you can define a set of common Hadoop properties that will be reused by its child connections to each individual Hadoop element such as Hive, HDFS or HBase.

For example, suppose the HDFS High Availability (HA) feature is defined in the hdfs-site.xml file of the Hadoop cluster you need to use. To enable this feature in Talend Studio, you need to set the corresponding properties in the connection wizard. These properties can also be set in a specific Hadoop-related component; that process is explained in the article about Enabling the HDFS High Availability feature in Talend Studio. This section presents the connection wizard approach only.

Prerequisites:

- Launch the Hadoop distribution you need to use and ensure that you have the proper access permission to that distribution.

- The High Availability properties to be set in Talend Studio have been defined in the hdfs-site.xml file of the cluster to be used.

<property>
  <name>dfs.nameservices</name>
  <value>nameservice1</value>
</property>
<property>
  <name>dfs.client.failover.proxy.provider.nameservice1</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
<property>
  <name>dfs.ha.namenodes.nameservice1</name>
  <value>namenode90,namenode96</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.nameservice1.namenode90</name>
  <value>hdp-ha:8020</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.nameservice1.namenode96</name>
  <value>hdp-ha2:8020</value>
</property>

The values of these properties are for demonstration purposes only.
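Before typing these name/value pairs into the wizard, it can help to list them directly from the cluster's hdfs-site.xml. The following is a minimal sketch, not part of Talend Studio: it assumes the file uses the standard Hadoop layout with a `<configuration>` root element, and the embedded sample (including the extra `dfs.replication` entry) and the helper names `read_properties` and `ha_properties` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample: the demonstration values from this page, wrapped in the
# <configuration> root of a standard hdfs-site.xml, plus one unrelated property.
HDFS_SITE_XML = """<configuration>
  <property><name>dfs.replication</name><value>3</value></property>
  <property><name>dfs.nameservices</name><value>nameservice1</value></property>
  <property>
    <name>dfs.client.failover.proxy.provider.nameservice1</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.nameservice1</name>
    <value>namenode90,namenode96</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.nameservice1.namenode90</name>
    <value>hdp-ha:8020</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.nameservice1.namenode96</name>
    <value>hdp-ha2:8020</value>
  </property>
</configuration>"""


def read_properties(xml_text):
    """Return {name: value} for every <property> element in the XML text."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def split_list(value):
    """Split a comma-separated value into trimmed, non-empty items."""
    return [item.strip() for item in value.split(",") if item.strip()]


def ha_properties(props):
    """Keep only the HA properties of each nameservice declared in props."""
    selected = {}
    nameservices = split_list(props.get("dfs.nameservices", ""))
    if nameservices:
        selected["dfs.nameservices"] = props["dfs.nameservices"]
    for ns in nameservices:
        # Per-nameservice keys: failover proxy provider and NameNode ID list.
        for key in (f"dfs.client.failover.proxy.provider.{ns}",
                    f"dfs.ha.namenodes.{ns}"):
            if key in props:
                selected[key] = props[key]
        # One RPC address per NameNode ID listed for this nameservice.
        for nn in split_list(props.get(f"dfs.ha.namenodes.{ns}", "")):
            key = f"dfs.namenode.rpc-address.{ns}.{nn}"
            if key in props:
                selected[key] = props[key]
    return selected


if __name__ == "__main__":
    for name, value in ha_properties(read_properties(HDFS_SITE_XML)).items():
        print(f"{name} = {value}")
```

To use it against a real cluster file, read the file's text and pass it to `read_properties` instead of the embedded sample; each printed pair corresponds to one property to define in the connection.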

To set these properties in the Hadoop connection, open the Hadoop Cluster Connection wizard from the Hadoop cluster node of the Repository. For further information about how to access this wizard, see Centralizing a Hadoop connection.

Procedure

Results

Once set, these properties are automatically reused by any child connection of this Hadoop connection.

The image above shows these properties inherited in the Hive connection wizard. For further information about how to access the Hive connection wizard as presented in this section, see Centralizing Hive metadata.
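The reuse described in this section can be pictured as a layered lookup in which a child connection sees the properties of its parent Hadoop connection alongside its own. The sketch below is only a conceptual model under that assumption, not Talend Studio's implementation; the child-only property name is a placeholder, and whether a child connection can override an inherited value is not stated on this page, so the sketch only reads values.

```python
from collections import ChainMap

# Properties defined once on the parent Hadoop cluster connection
# (demonstration values from this page).
cluster_properties = {
    "dfs.nameservices": "nameservice1",
    "dfs.ha.namenodes.nameservice1": "namenode90,namenode96",
}

# Placeholder for a setting defined only on a child (for example Hive) connection.
child_properties = {"example.child.only.property": "some-value"}

# The child's effective view: its own properties first, then the parent's.
effective = ChainMap(child_properties, cluster_properties)

if __name__ == "__main__":
    for name in sorted(effective):
        print(f"{name} = {effective[name]}")
```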
