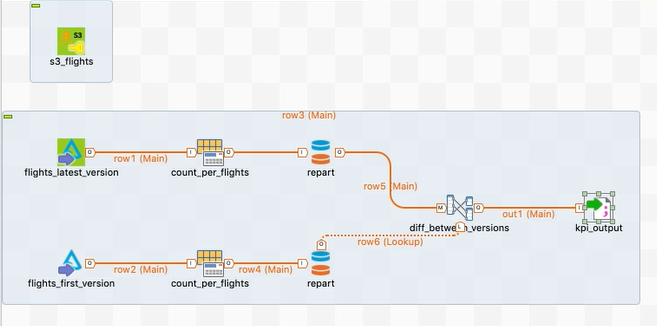

Computing the day-over-day evolution of US flights using a related Delta Lake dataset

The Job in this scenario uses a sample Delta Lake dataset to calculate the day-over-day Key Performance Indicator (KPI) of US flights.
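As a rough illustration of what a day-over-day calculation involves, the following minimal Python sketch computes the change in a daily count relative to the previous day. It is not the Talend Job itself: the function name `day_over_day`, the dictionary layout, and the figures are hypothetical and are not taken from the sample dataset.

```python
from datetime import date


def day_over_day(daily_counts):
    """Return (day, count, change vs. previous day) tuples, sorted by day.

    The first day has no previous day, so its change is None.
    """
    rows = []
    previous = None
    for day in sorted(daily_counts):
        count = daily_counts[day]
        change = None if previous is None else count - previous
        rows.append((day, count, change))
        previous = count
    return rows


# Made-up figures for illustration only; not taken from the sample dataset.
sample = {
    date(2020, 1, 1): 100,
    date(2020, 1, 2): 120,
    date(2020, 1, 3): 90,
}

result = day_over_day(sample)
for day, count, change in result:
    print(day.isoformat(), count, change)
```

In the actual scenario, the equivalent logic is built with Talend components reading from the Delta Lake dataset rather than with hand-written code.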

For more technologies supported by Talend, see Talend components.

Prerequisites:

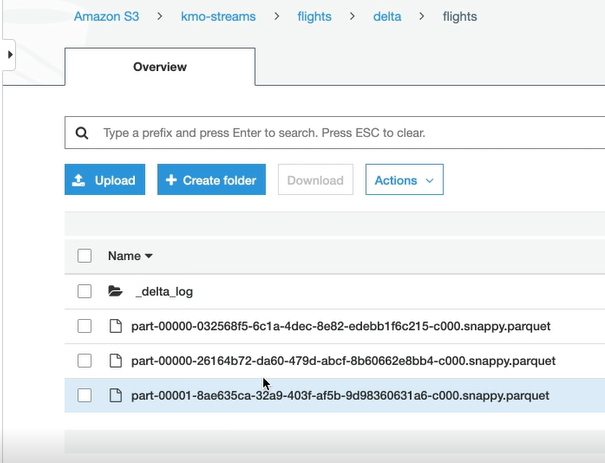

- The filesystem to be used with Delta Lake must be S3, Azure or HDFS.

- Ensure that the credentials to be used have read and write permissions on this filesystem.

- The sample Delta Lake dataset to be used has been downloaded from Talend Help Center and stored on your filesystem. This dataset is for demonstration purposes only; it contains two snapshots of US flights per date, which reflect the evolution of these flights on each date.

Although not always required, it is recommended to install a Talend Jobserver on the edge node of your Hadoop cluster. Then, in the Preferences dialog box in your Studio, or in Talend Administration Center if you use it to run your Jobs, define this Jobserver as the remote execution server of your Jobs.
