Hiding sensitive information provided in the context of your Spark Job

When you run a Talend Studio Job for Apache Spark on a Talend JobServer, and that Job uses a context in which your user password is defined, the Talend JobServer may fail to hide the password in its Talend CommandLine terminal.

Your Job runs on one of the following clusters:

- Microsoft HDInsight

- Google Cloud Dataproc

- Cloudera Altus

- Databricks

- Qubole

- All the other supported distributions when they run on Yarn Cluster.

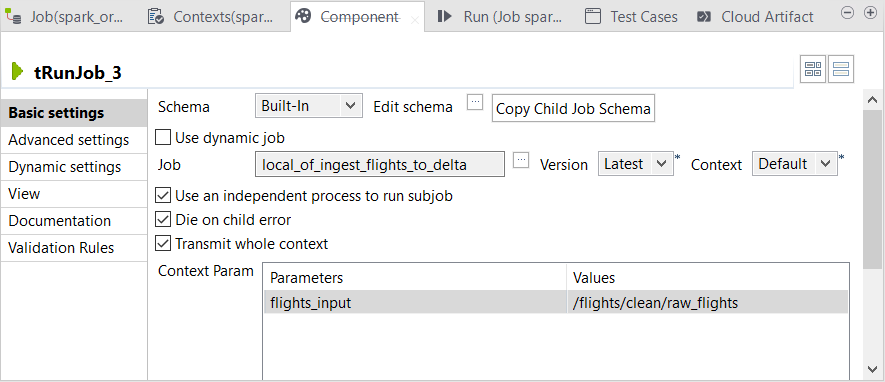

to import these contexts to your Job.
