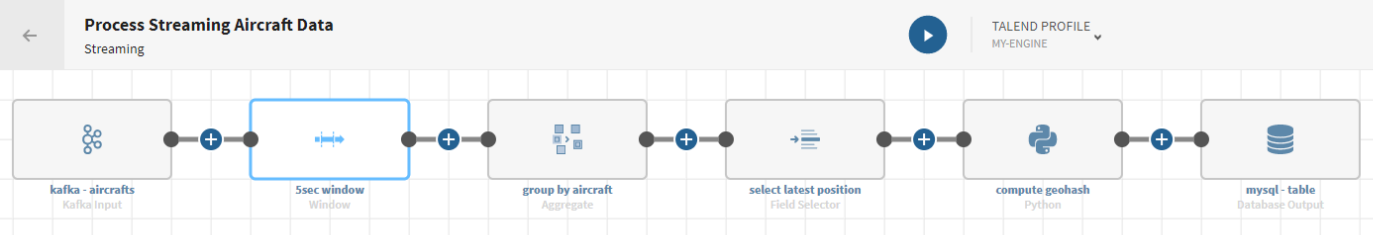

Processing streaming aircraft data

Before you begin

-

You have previously created a connection to the system storing your source data.

-

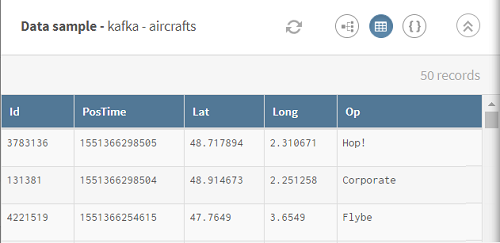

You have previously added the dataset holding your source data.

Here, streaming aircraft data including aircraft IDs, position, and timestamp.

To help you understand this scenario, here is the AVRO schema of the streaming data used in this scenario:{ "type": "record", "name": "aircrafts", "fields": [ {"name": "Id", "type": "int"}, {"name": "PosTime", "type": "long"}, {"name": "Lat", "type": "double"}, {"name": "Long", "type": "double"}, {"name": "Op", "type": "string"} ] }where Id corresponds to Aircraft identifiers, PosTime corresponds to the Timestamp of the position, Lat/Long correspond to the aircraft latitude/longitude and Op corresponds to Airline companies.

-

You also have created the connection and the related dataset that will hold the processed data.

Here, a MySQL table.

Procedure

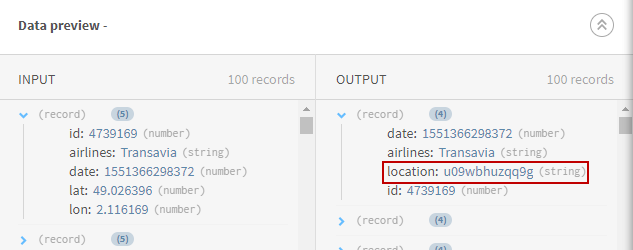

Results

Your streaming pipeline is being executed and will run until you decide to terminate it. The aircraft data is modified and the calculated geohash information is sent to the target system you have indicated.

Did this page help you?

If you find any issues with this page or its content – a typo, a missing step, or a technical error – let us know how we can improve!