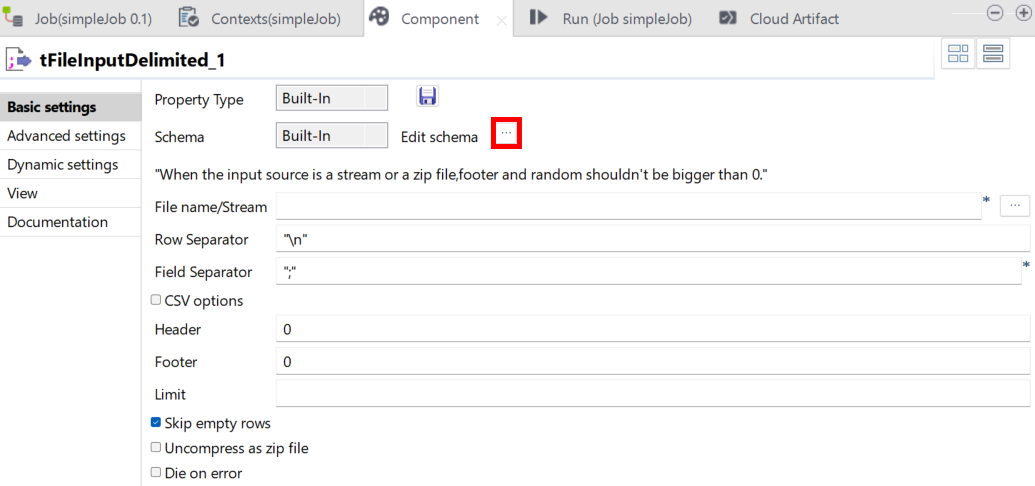

Reading data from an HDFS connection on Spark

Using predefined HDFS metadata, you can read data from an HDFS file system on Spark.

Before you begin

- This tutorial uses a Hadoop cluster, so you must have one available to you.

- You must also have HDFS metadata configured (see Creating a Hadoop cluster metadata definition and Importing a Hadoop cluster metadata definition).

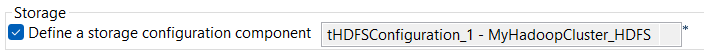

- You must have configured your HDFS connection on Spark (see Configuring a HDFS connection to run on Spark).
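For orientation only, here is a minimal Python sketch of what reading from HDFS on Spark amounts to outside the graphical tool. It is not how the product generates or runs its jobs. The `HdfsMetadata` class, the `hdfs_uri` and `read_lines` helpers, the host name, and the port `8020` are all hypothetical and stand in for whatever your own metadata definition contains. `read_lines` additionally assumes that PySpark is installed and that the cluster is reachable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HdfsMetadata:
    """Hypothetical stand-in for a predefined HDFS metadata definition."""
    namenode_host: str
    namenode_port: int = 8020  # assumed NameNode RPC port; check your cluster


def hdfs_uri(meta: HdfsMetadata, path: str) -> str:
    """Build a fully qualified hdfs:// URI for a file or directory."""
    return f"hdfs://{meta.namenode_host}:{meta.namenode_port}/{path.lstrip('/')}"


def read_lines(meta: HdfsMetadata, path: str):
    """Read a text file from HDFS as a Spark DataFrame (requires pyspark)."""
    # Imported lazily so the helpers above can be used without Spark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("read-from-hdfs").getOrCreate()
    return spark.read.text(hdfs_uri(meta, path))
```

With a hypothetical host, `hdfs_uri(HdfsMetadata("namenode.example.com"), "/user/data/in.txt")` produces a URI that can be passed to `spark.read.text`. Keeping the connection details in one object mirrors the idea of reusing predefined metadata rather than retyping host and port for each read.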