Profiling an ADLS Databricks file via Hive

From the Profiling perspective of Talend Studio, you can generate a column analysis on an ADLS Databricks file through Hive.

- Download the JDBC driver and add it to Talend Studio.

- Create a JDBC connection to the ADLS Databricks cluster.

- Create a column analysis with simple indicators on the table and columns.

You can then modify the analysis settings and add other indicators as needed. You can also create other analyses later on this ADLS file by using the same Hive table.

Downloading the JDBC driver and adding it to Talend Studio

The JDBC driver is a jar file that you need to create a JDBC connection.

Procedure

Results

Connecting to an ADLS Databricks Gen2 file

You need to create an external table and set up a parameter to profile an ADLS Databricks Gen2 file.
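As a rough illustration of what the external table and the parameter can look like, the sketch below assembles a Hive-style `CREATE EXTERNAL TABLE` statement that points at an ADLS Gen2 location, along with the name of a storage-account key property. The helper names, the sample container, account, and column names are hypothetical. It is also an assumption that the file is delimited text and that the parameter in question is the storage account access key set in the cluster's Spark configuration; adapt both to your environment.

```python
from typing import Mapping


def abfss_location(container: str, account: str, path: str) -> str:
    """Build an ADLS Gen2 URI using the abfss scheme.

    The location should be the directory that holds the file,
    not the file itself.
    """
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


def account_key_property(account: str) -> str:
    """Name of the Spark property carrying the storage account key.

    Assumption: access is granted with an account key set in the
    cluster's Spark configuration.
    """
    return f"spark.hadoop.fs.azure.account.key.{account}.dfs.core.windows.net"


def external_table_ddl(
    table: str,
    columns: Mapping[str, str],
    location: str,
    delimiter: str = ",",
) -> str:
    """Return a CREATE EXTERNAL TABLE statement for a delimited text file."""
    cols = ", ".join(f"`{name}` {sql_type}" for name, sql_type in columns.items())
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {table} ({cols}) "
        f"ROW FORMAT DELIMITED FIELDS TERMINATED BY '{delimiter}' "
        f"STORED AS TEXTFILE "
        f"LOCATION '{location}'"
    )


if __name__ == "__main__":
    # Hypothetical container, account, and columns, for illustration only.
    loc = abfss_location("mycontainer", "myaccount", "/data/customers")
    print(account_key_property("myaccount"))
    print(external_table_ddl("customers", {"id": "INT", "name": "STRING"}, loc))
```

The generated statement can be run from a Databricks notebook or any SQL client connected to the cluster. Once the table exists, it is the object that the profiling analysis reads.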

Before you begin

To create an external table, you can also use the tAzureFSConfiguration and tJava components.

About this task

Procedure

Creating a connection to an ADLS Databricks cluster

Before you begin

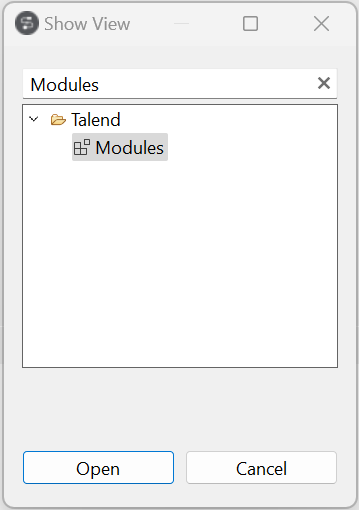

- You have selected the Profiling perspective.

- You have followed the procedure Downloading the JDBC driver and adding it to Talend Studio.

About this task

Procedure
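As a rough illustration of the connection settings involved, the sketch below assembles a JDBC URL of the form commonly used with the Simba Spark JDBC driver for Databricks and personal access token authentication. Which driver you downloaded is not stated here, so the `jdbc:spark://` prefix is an assumption (newer Databricks drivers use `jdbc:databricks://`). The function name, host name, HTTP path, and token are hypothetical placeholders.

```python
def databricks_jdbc_url(
    host: str,
    http_path: str,
    token: str,
    port: int = 443,
    schema: str = "default",
) -> str:
    """Assemble a Databricks JDBC URL for token-based authentication.

    Assumption: the Simba Spark JDBC driver is in use, which expects
    the jdbc:spark:// prefix and semicolon-separated properties.
    """
    params = {
        "transportMode": "http",
        "ssl": "1",
        "httpPath": http_path,
        "AuthMech": "3",   # user name / password authentication
        "UID": "token",    # literal user name used with access tokens
        "PWD": token,      # the personal access token itself
    }
    tail = ";".join(f"{key}={value}" for key, value in params.items())
    return f"jdbc:spark://{host}:{port}/{schema};{tail}"


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(
        databricks_jdbc_url(
            host="adb-0000000000000000.0.azuredatabricks.net",
            http_path="sql/protocolv1/o/0/0000-000000-example",
            token="<personal-access-token>",
        )
    )
```

The host name and HTTP path are available in the JDBC/ODBC settings of the Databricks cluster. Avoid storing the token in plain text in shared connection metadata.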

Creating a profiling analysis on an ADLS Databricks file via Hive

After creating a connection to an ADLS Databricks cluster via Hive, you can create a profiling analysis on a specific file.

Before you begin

- You have selected the Profiling perspective.

- You have followed the procedure Creating a connection to an ADLS Databricks cluster.

For more information on column analysis, see Column analyses.
