What's new in R2021-01

Big Data: new features

| Feature | Description | Available in |
|---|---|---|

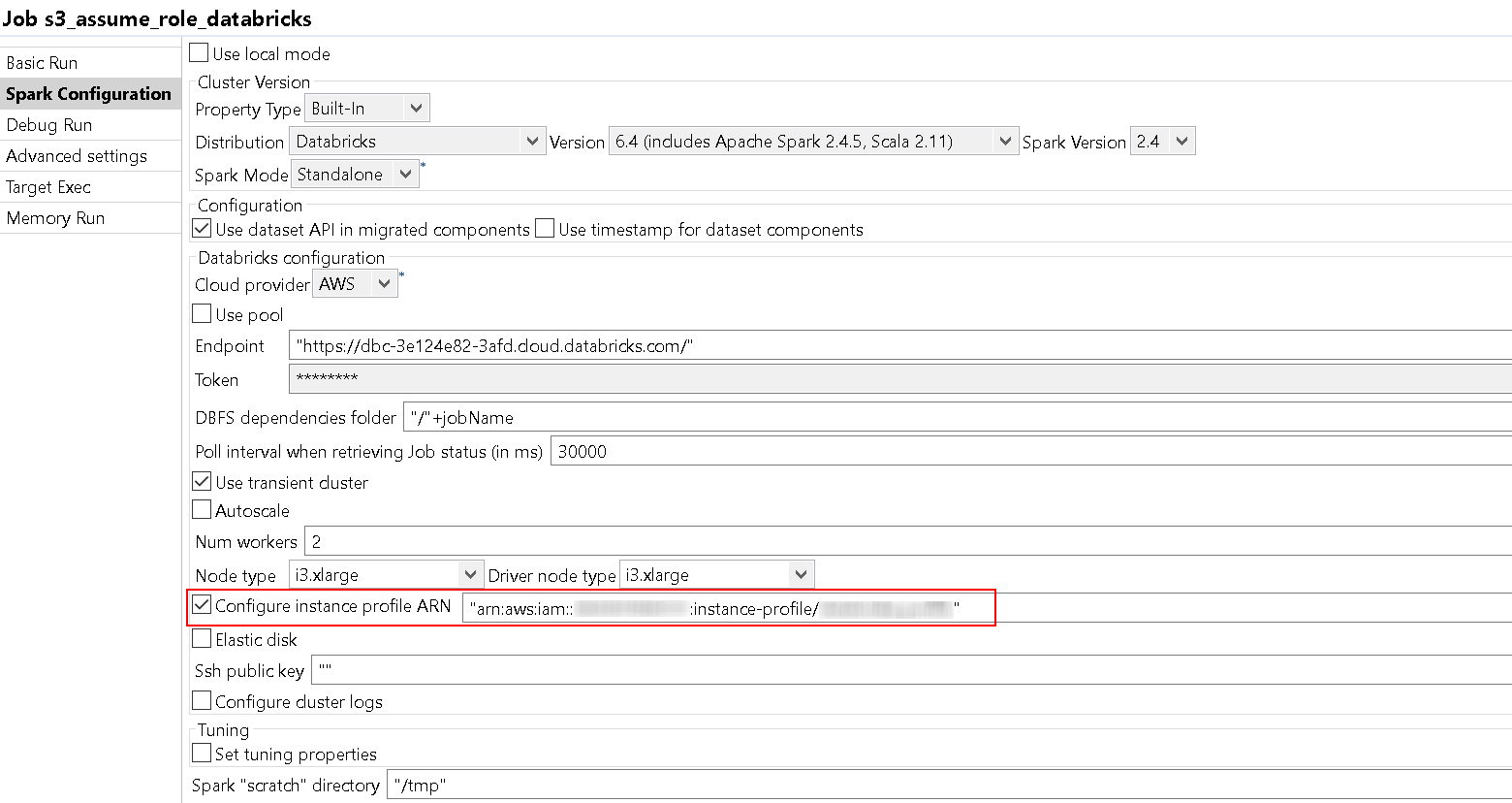

| Assume Role configuration for Databricks 5.5 LTS and 6.4 distributions | When you run a Job on Databricks 5.5 LTS or 6.4 and want to read data from and write data to S3, you can now make your Job temporarily assume a role and the permissions associated with that role. You no longer need to specify the secret and access keys for Databricks clusters in the tS3Configuration component. Instead, specify the Amazon Resource Name (ARN) of the role to assume in the Spark configuration view, then enter the bucket name and select the Inherit credentials from AWS check box in the Basic settings view of the tS3Configuration component. | All Talend products with Big Data |

| Basic Assume Role configuration in tS3Configuration component | When you enable the Assume Role option in the tS3Configuration component, you can now configure the following properties from the Basic settings view to fine-tune your configuration: This feature is now available for the CDP Private Cloud Base 7.1 distribution. | All Talend products with Big Data |

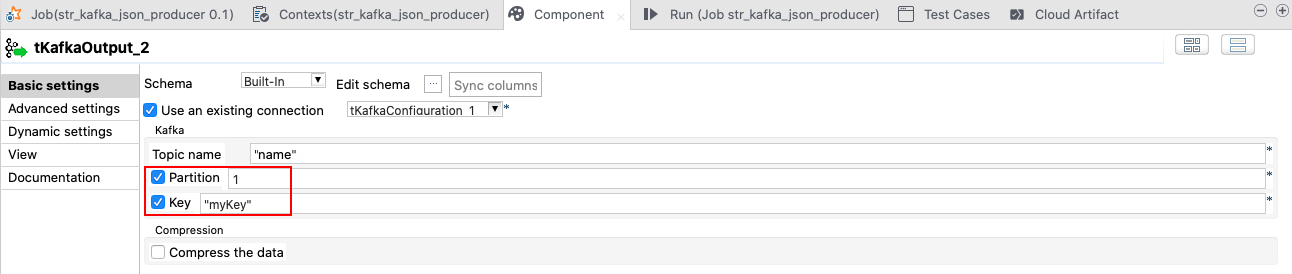

| Topic, partition, and key options available in Kafka components | You can now add information about the key and the partition used for the messages in the tKafkaOutput component. The tKafkaInput component reads this information into its output schema through the following new attributes: topic, partition, and key. This feature allows you to retrieve and show more information about the Kafka messages read from the topic. | All Talend products with Big Data |

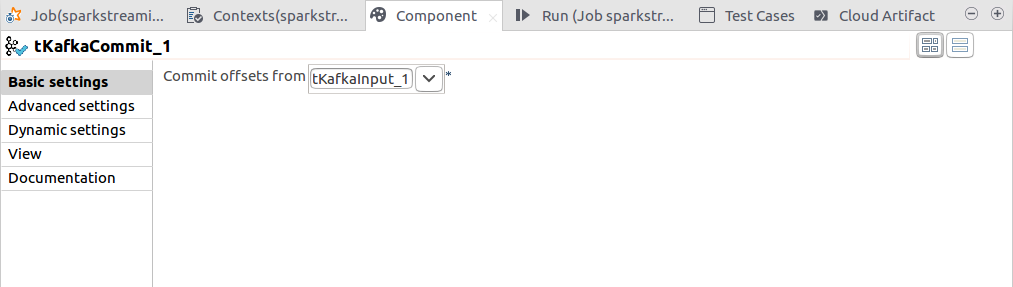

| tKafkaCommit available in Spark Streaming Jobs | You can now use the tKafkaCommit component in your Spark Streaming Jobs with Spark v2.0 and onwards in the Local Spark mode. This component allows you to manually control when the offset is committed. It lets you commit in one go rather than relying on an auto-commit at a given time interval. | All Talend products with Big Data |

| Deprecated distributions | The following distributions are now deprecated: | All Talend products with Big Data |
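
The difference between committing offsets manually in one go and relying on a timed auto-commit can be illustrated with a small simulation. The sketch below is not Talend or Kafka client code: `Message`, `SimulatedConsumer`, and `process_batch` are hypothetical names, and the consumer is a plain in-memory stand-in. It only shows the idea that offsets are committed once, after every message in a batch has been handled, and that each message carries its topic, partition, and key.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    """A message with the metadata attributes mentioned above."""
    topic: str
    partition: int
    key: str
    offset: int
    value: str


@dataclass
class SimulatedConsumer:
    """Hypothetical in-memory stand-in for a Kafka consumer (not a real client)."""
    messages: list
    # (topic, partition) -> next offset to read after a commit
    committed: dict = field(default_factory=dict)
    # offsets consumed since the last commit
    _pending: dict = field(default_factory=dict)

    def poll(self):
        """Yield messages not yet committed, tracking consumed offsets."""
        for msg in self.messages:
            tp = (msg.topic, msg.partition)
            if msg.offset < self.committed.get(tp, 0):
                continue
            self._pending[tp] = max(self._pending.get(tp, 0), msg.offset + 1)
            yield msg

    def commit(self):
        """Commit every offset consumed so far in one go."""
        self.committed.update(self._pending)
        self._pending.clear()


def process_batch(consumer, handler):
    """Handle all available messages, then commit once if nothing failed."""
    processed = [handler(msg) for msg in consumer.poll()]
    consumer.commit()  # reached only when every handler call succeeded
    return processed
```

If `handler` raises partway through a batch, `commit` is never called, so `committed` stays unchanged for that batch. With a time-based auto-commit, offsets could instead be committed before processing has finished.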

Data Integration: new features

| Feature | Description | Available in |
|---|---|---|

| Shared mode for Talend Studio | Talend Studio now supports the shared mode, which allows each user on the machine where Talend Studio is installed to work with different configuration and workspace folders. | All Talend products with Talend Studio |

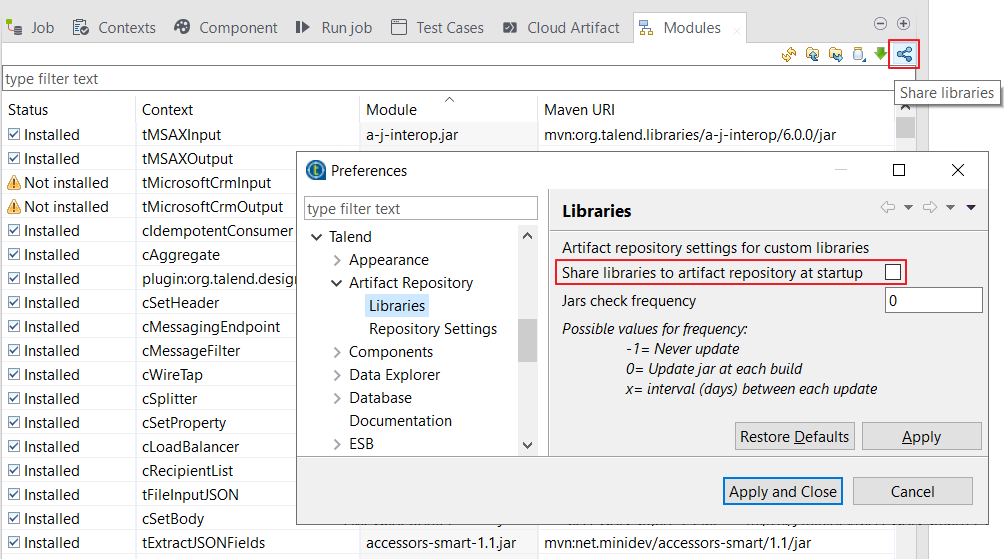

| Libraries sharing enhancement | Talend Studio now supports: By default, the libraries are not shared at Talend Studio startup to improve the startup performance. | All Talend products with Talend Studio |

|

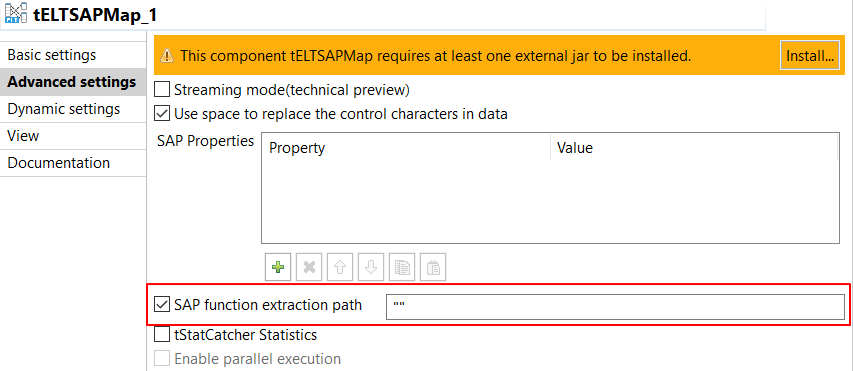

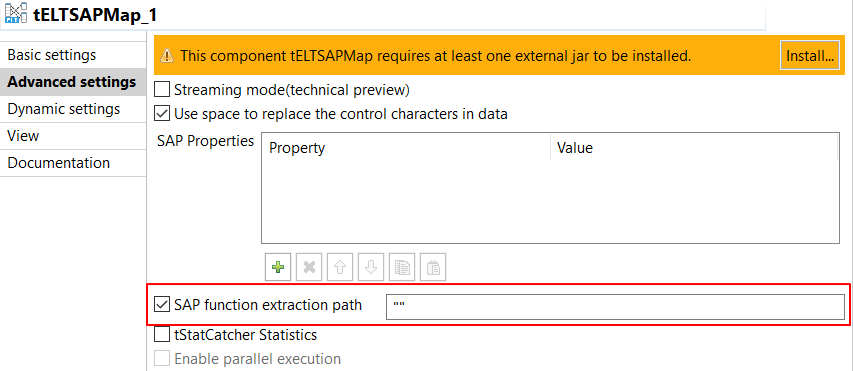

SAP function extraction path customizable |

You can specify the path for the SAP function to generate the files that hold the data extracted. Components applied:

|

All Talend products with Talend Studio |

| tGPGDecrypt: specifying additional parameters for the GPG decrypt command | The Use extra parameters option is provided, allowing you to specify additional parameters for the GPG decrypt command. | All Talend products with Talend Studio |

| Support for Greenplum 6.x | This release provides support for Greenplum 6.x. | All Talend products with Talend Studio |

|

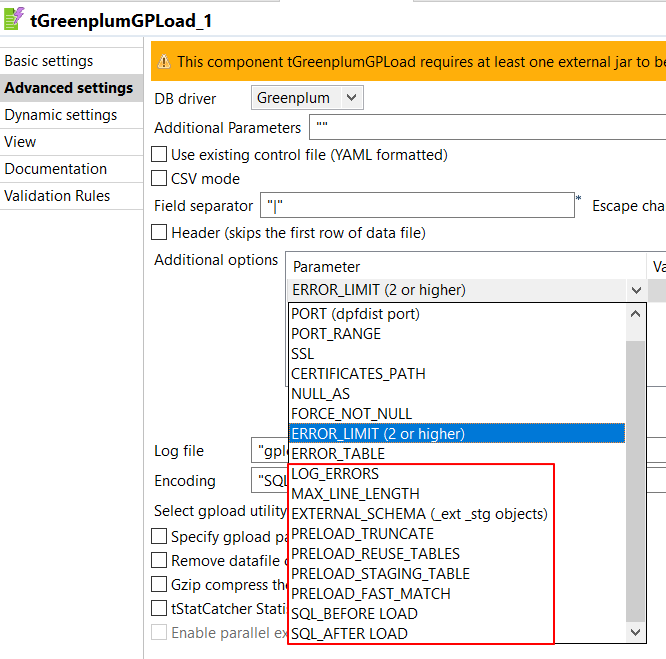

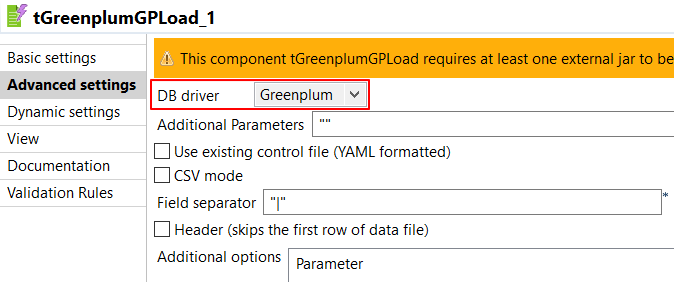

Greenplum components: the default Database driver changed |

For Greenplum components, the database driver defaults to Greenplum.

|

All Talend products with Talend Studio |

|

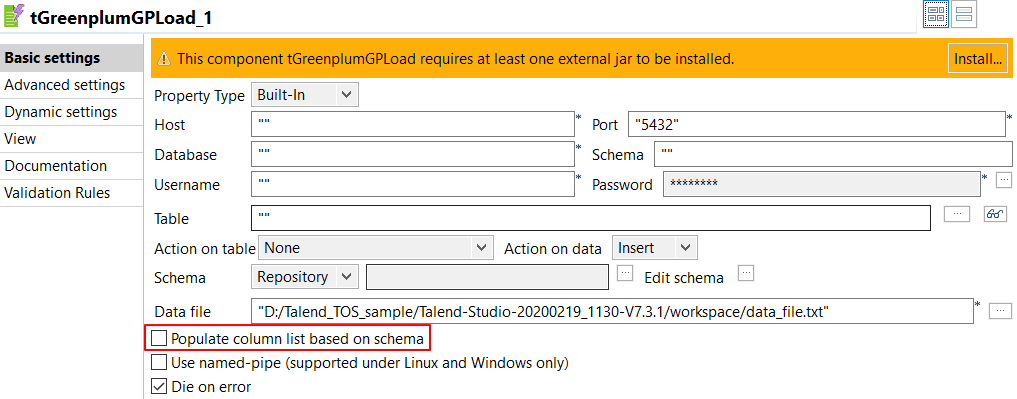

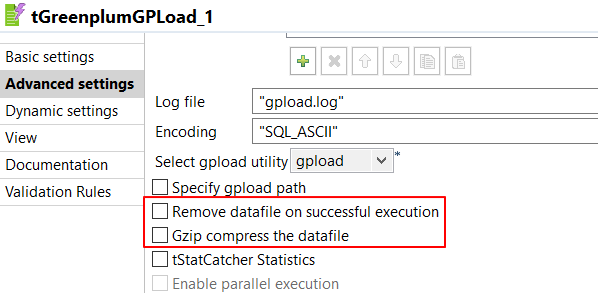

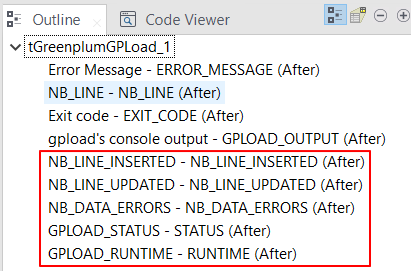

tGreenplumGPLoad improved |

Multiple new features/options are added to tGreenplumGPLoad. As listed below.

|

All Talend products with Talend Studio |

Data Quality: new features

| Feature | Description | Available in |
|---|---|---|

| Shared mode | Talend Studio now supports the shared mode. If you enable it, some paths change: | All Talend Platform and Data Fabric products |

| Supported databases | SAP Hana is now supported in the Profiling perspective for Table, View, and Calculation view schemas. | All Talend Platform and Data Fabric products |

| New components | The tSAPHanaValidRows and tSAPHanaInvalidRows components check SAP Hana database rows against specific data quality patterns (regular expressions) or data quality rules (business rules). | All Talend Platform and Data Fabric products |

| tDataMasking and tDataUnmasking | The Dynamic data type is now supported by the Standard component. | All Talend Platform and Data Fabric products |
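
Checking rows against a data quality pattern amounts to splitting them into a valid set and an invalid set. The sketch below illustrates that idea in plain Python. It is not how the tSAPHanaValidRows and tSAPHanaInvalidRows components are implemented: the `split_rows` function, the dictionary-based rows, and the sample pattern are all assumptions made for illustration.

```python
import re


def split_rows(rows, column, pattern):
    """Split rows into (valid, invalid) by matching one column against a regex.

    A row is valid only when the column holds a string that matches the
    whole pattern; missing or non-string values count as invalid.
    """
    regex = re.compile(pattern)
    valid, invalid = [], []
    for row in rows:
        value = row.get(column)
        if isinstance(value, str) and regex.fullmatch(value):
            valid.append(row)
        else:
            invalid.append(row)
    return valid, invalid


# Hypothetical sample data: codes are expected to be two letters and four digits.
rows = [
    {"id": 1, "code": "AB1234"},
    {"id": 2, "code": "ab12"},
    {"id": 3, "code": None},
]
valid_rows, invalid_rows = split_rows(rows, "code", r"[A-Z]{2}\d{4}")
```

Using `fullmatch` rather than `search` means a value such as `AB1234-extra` is rejected instead of passing on a partial match.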
