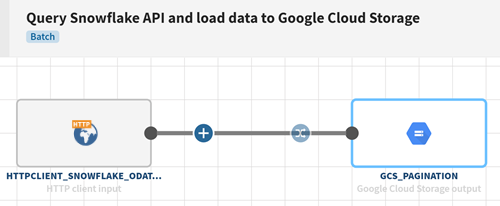

Querying the Snowflake API and sending the data to Google Cloud Storage

Before you begin

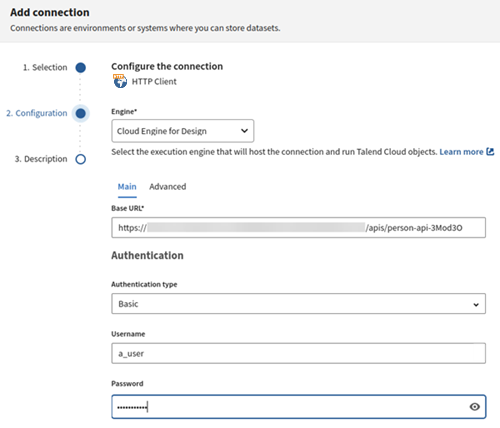

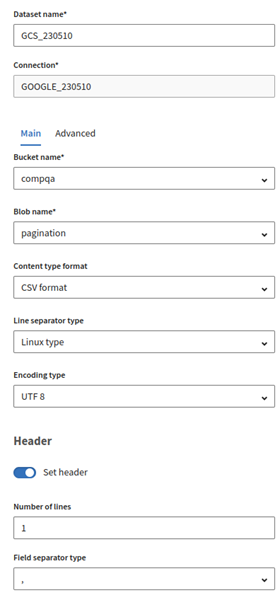

Procedure

Results

Your pipeline is executed. Rows are retrieved through the OData API in batches of 5, and all rows of the Snowflake table, starting from the 11th row, are copied into a file in Google Cloud Storage.
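To make the paging behavior concrete, here is a minimal Python sketch of the same retrieval pattern: skip the first 10 rows, then read 5 rows per request until the table is exhausted. It relies on the standard OData `$skip` and `$top` query options. The endpoint URL, the function names, and the in-memory table that stands in for the Snowflake data are all hypothetical and only illustrate the logic. The pipeline itself is configured in the product, not written in code.

```python
from typing import Callable, Iterator, List
from urllib.parse import urlencode


def page_url(base_url: str, skip: int, top: int) -> str:
    """Build a request URL with the standard OData $skip and $top options."""
    query = urlencode({"$skip": skip, "$top": top}, safe="$")
    return f"{base_url}?{query}"


def fetch_all(
    fetch_page: Callable[[int, int], List[int]],
    start_skip: int = 10,   # skipping 10 rows means reading starts at row 11
    page_size: int = 5,     # rows are retrieved 5 by 5
) -> Iterator[int]:
    """Yield every row from start_skip onward, one page at a time."""
    skip = start_skip
    while True:
        rows = fetch_page(skip, page_size)
        if not rows:
            break
        yield from rows
        if len(rows) < page_size:
            break  # a short page means the end of the table was reached
        skip += page_size


# Hypothetical stand-in for the Snowflake table: 23 rows numbered 1 to 23.
TABLE = list(range(1, 24))
requested_urls: List[str] = []


def fake_fetch(skip: int, top: int) -> List[int]:
    """Simulate the API call and record the URL that would be requested."""
    requested_urls.append(page_url("https://example.invalid/odata/MY_TABLE", skip, top))
    return TABLE[skip:skip + top]


copied_rows = list(fetch_all(fake_fetch))
```

With the 23-row stand-in table, `copied_rows` holds rows 11 through 23, and `requested_urls` lists three requests with `$skip` values of 10, 15, and 20. In a real setup, `fake_fetch` would be replaced by an HTTP call to the API, and the collected rows would be written to the target file.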
