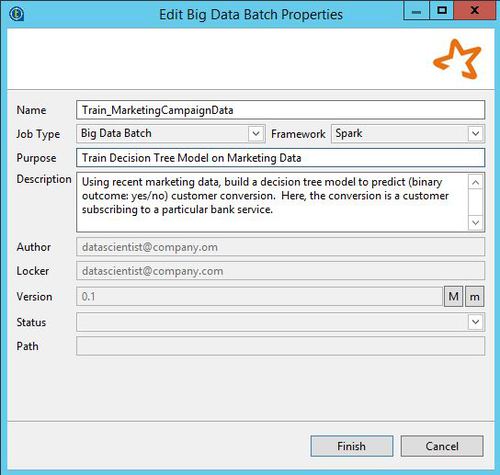

Creating a Spark Job for machine learning

This section explains how to create a Spark Job to develop a machine learning routine.

Procedure
