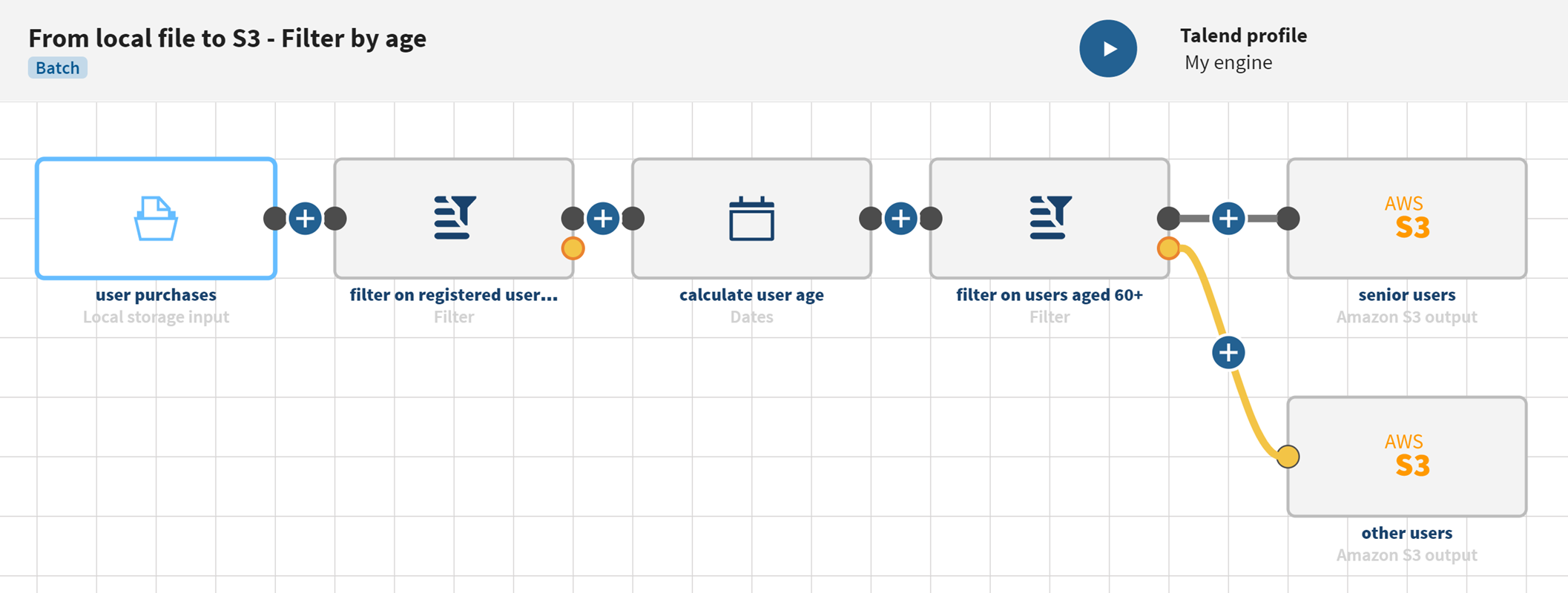

Filtering data from a local file and splitting it into two Amazon S3 outputs

This scenario aims to help you set up and use connectors in a pipeline. Adapt it to your own environment and use case.

Before you begin

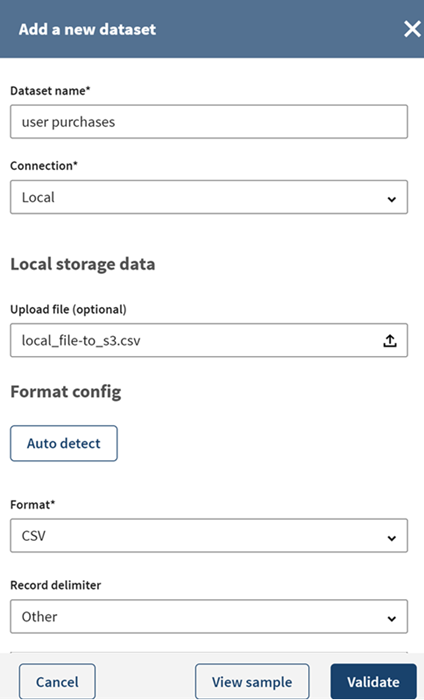

- If you want to reproduce this scenario, download and extract the file local_file-to_s3.zip. The file contains user purchase data, such as registration information, purchase price, and date of birth.

Procedure

Results

Your pipeline is being executed. The user information stored in your local file has been filtered, the user ages have been calculated, and the output flows are sent to the S3 bucket you have defined. These separate outputs can now be used, for example, for different targeted marketing campaigns.
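
For readers who want to reason about the logic outside the pipeline, the filter, age calculation, and split can be sketched in plain Python. This is only an illustrative sketch, not the pipeline's actual configuration: the column names (`user_id`, `purchase_price`, `date_of_birth`), the filter condition (a purchase price above zero), the age threshold of 30, and the sample rows are all assumptions, since the scenario does not list them here.

```python
import csv
import io
from datetime import date


def age_on(birth: date, today: date) -> int:
    """Return the number of full years between birth and today."""
    before_birthday = (today.month, today.day) < (birth.month, birth.day)
    return today.year - birth.year - (1 if before_birthday else 0)


def split_users(rows, today, min_price=0.0, age_threshold=30):
    """Filter rows, add an 'age' field, and split them into two lists.

    The filter (price > min_price) and the split (age < age_threshold)
    are hypothetical criteria chosen for illustration only.
    """
    younger, older = [], []
    for row in rows:
        # Filter step: drop rows that do not meet the assumed condition.
        if float(row["purchase_price"]) <= min_price:
            continue
        # Age calculation step, based on the assumed ISO date column.
        birth = date.fromisoformat(row["date_of_birth"])
        enriched = dict(row, age=age_on(birth, today))
        # Split step: route each row to one of the two outputs.
        target = younger if enriched["age"] < age_threshold else older
        target.append(enriched)
    return younger, older


# Hypothetical sample standing in for the local file's content.
SAMPLE = """user_id,purchase_price,date_of_birth
1,25.50,1990-06-15
2,0,1985-01-01
3,12.00,2001-12-31
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
younger, older = split_users(rows, today=date(2024, 6, 14))
```

Each of the two resulting lists corresponds to one output flow. Writing them to an S3 bucket would require an AWS SDK and credentials, which are left out of this sketch.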
