Configuring a Big Data Streaming Job using the Spark Streaming Framework

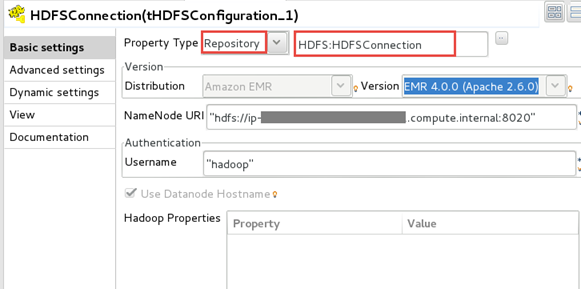

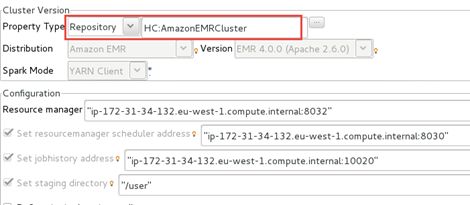

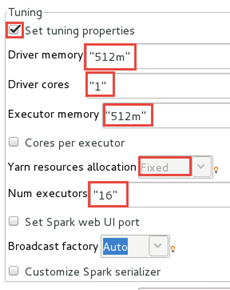

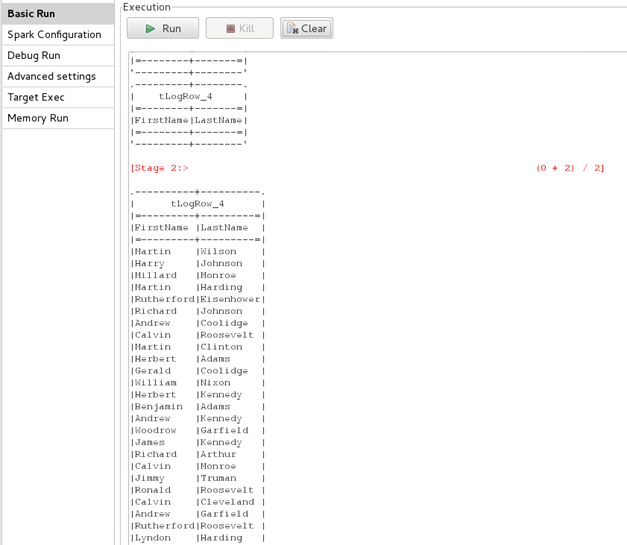

Before running your Job, you need to configure it to use your Amazon EMR cluster.

Procedure
