What's new in R2022-09

Big Data: new features

|

Feature |

Description |

Available in |

|---|---|---|

| Support for Spark Universal 3.3.x in local mode | You can now run your Spark Batch and Streaming Jobs using Spark Universal

with Spark 3.3.x in Local mode. You can configure it either in the

Spark Configuration view of your Spark Jobs or in the

Hadoop Cluster Connection metadata wizard. Talend Data Mapper Big Data components and Talend Data Quality components were not compatible with Spark 3.3.x at the time of this release, so Spark Universal 3.2.x remains the default version for this patch. Spark 3.3.x is supported from version R2020-10 onwards.

|

All subscription-based Talend products with Big Data |

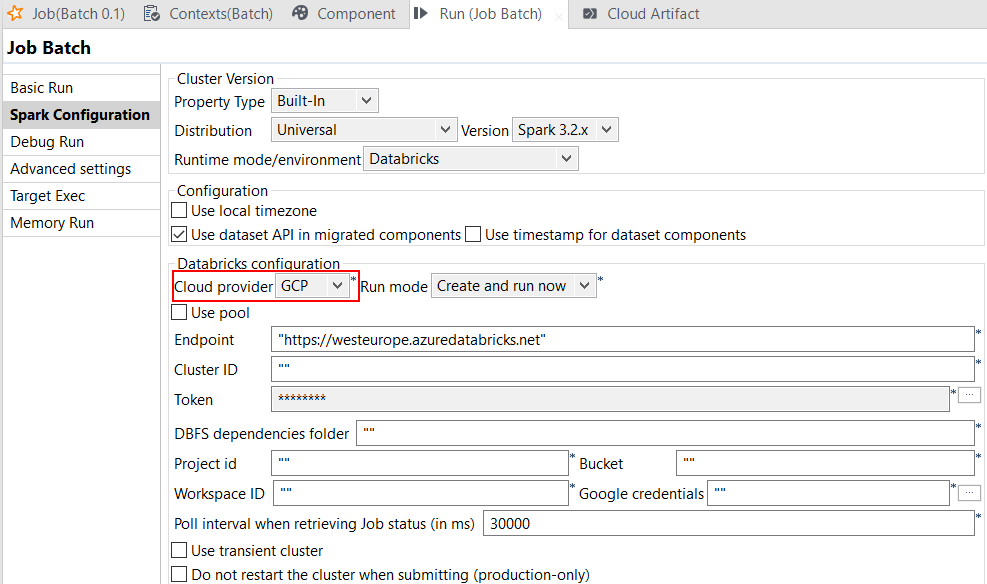

| Support for Databricks runtime 10.x and onwards with GCP on Spark Universal 3.2.x | You can now run your Spark Batch and Streaming Jobs on job and all-purpose

Databricks clusters on Google Cloud Platform (GCP) using Spark Universal with

Spark 3.2.x. You can configure it either in the Spark

Configuration view of your Spark Jobs or in the Hadoop

Cluster Connection metadata wizard. When you select this mode, Talend Studio is compatible with Databricks 10.x and onwards versions.

|

All subscription-based Talend products with Big Data |

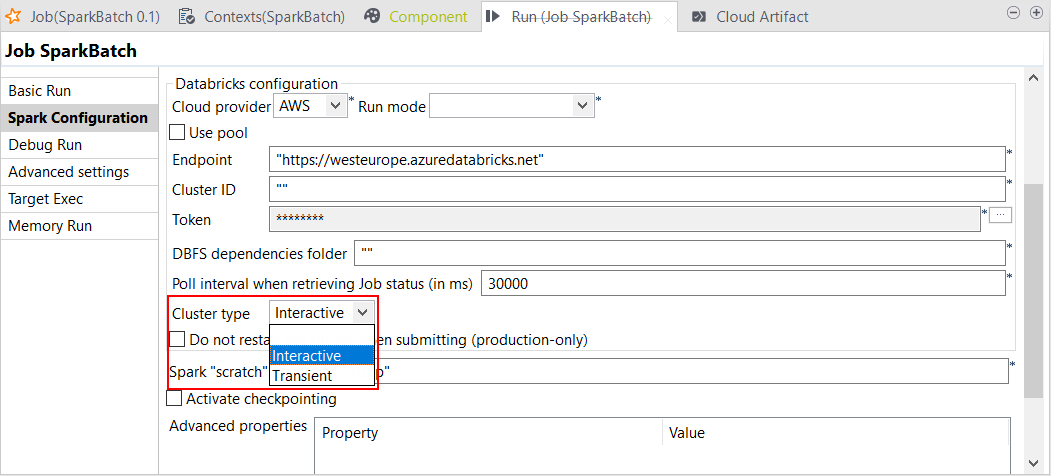

| Support for context group to switch from job to all-purpose clusters on Databricks in Spark Jobs | You can now switch from job to all-purpose clusters on Databricks using

context group. When you configure the connection to the Databricks cluster, you

can now add specific context groups that are used when you run your Spark Jobs.

You can configure it either in the Spark Configuration view

of your Spark Jobs or in the Hadoop Cluster Connection

metadata wizard, but it is recommended to configure it in the Hadoop

Cluster Connection metadata wizard.

|

All subscription-based Talend products with Big Data |

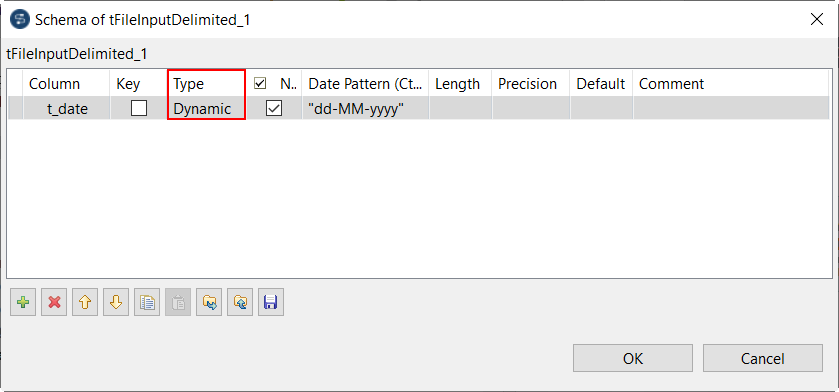

| Enhancement of tFileInputDelimited to support dynamic schema in Spark Batch Jobs | You can now add a dynamic column to the schema of tFileInputDelimited in

your Spark Batch Jobs. The dynamic schema functionality allows you to configure a

schema in a non-static way, so you won't have to redesign your Spark Job for

future schema alteration while ensuring it will work all the time.

|

All subscription-based Talend products with Big Data |

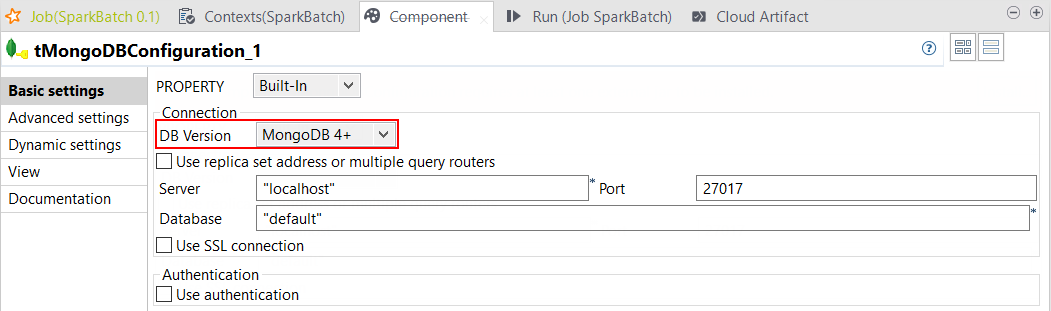

| Support for MongoDB v4+ for Spark Batch 3.1 and onwards |

Talend Studio

now supports MongoDB v4+ with Spark 3.1 and onwards versions for the following

components in your Spark Batch Jobs using Dataset:

|

All subscription-based Talend products with Big Data |

Data Integration: new features

|

Feature |

Description |

Available in |

|---|---|---|

| SSO login to Talend Studio via Talend Cloud | If you are working with Talend Cloud,

you can now log in to Talend Studio

via Talend Cloud,

either with SSO or in the regular way. The login screen provides the following two options:

Information noteNote: Logging in to Talend Studio via Talend Cloud is currently unsupported for Linux on ARM64.

For more information, see Launching Talend Studio.

|

All subscription-based Talend products with Talend Studio |

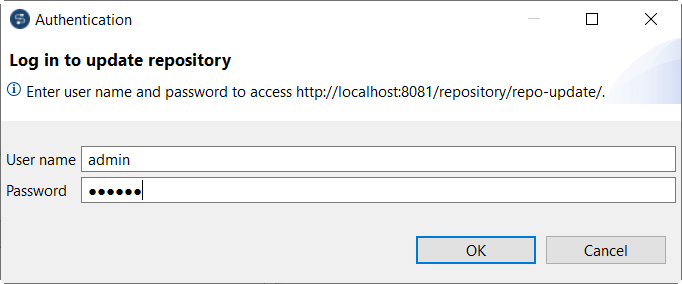

| Support of basic authentication to access update repositories |

Talend Studio

now supports basic authentication for update repositories based on the Eclipse

secure storage. For more information, see Basic authentication for update repositories in Talend Studio.

|

All subscription-based Talend products with Talend Studio |

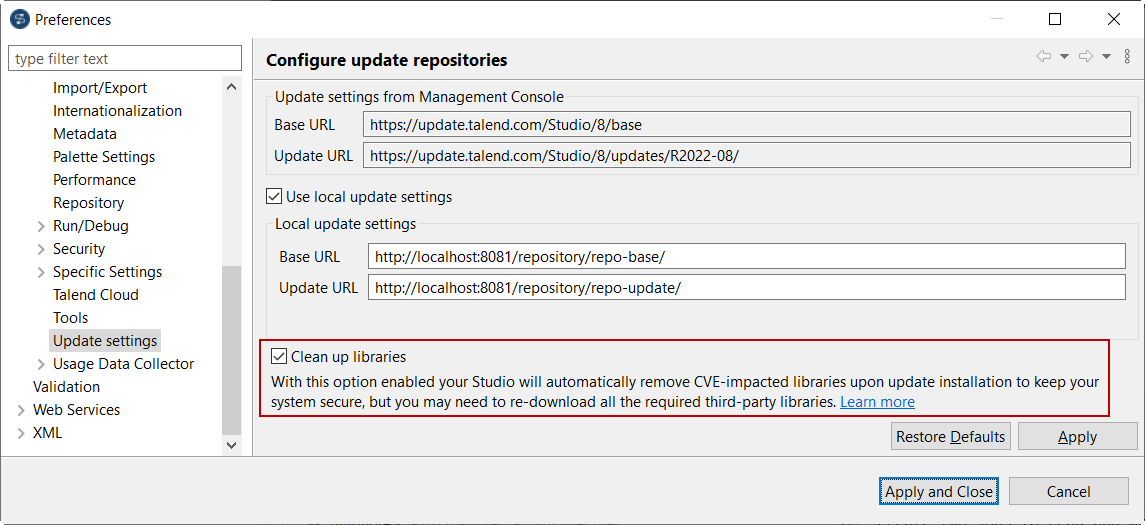

| New option to clean up obsolete libraries upon update installation | You can now configure Talend Studio

to automatically clean up obsolete libraries upon update installation by:

This helps save disk space and reduce noise triggered by security tools scanning for vulnerabilities in libraries. Note that:

For more information, see Updating Talend Studio.

|

All subscription-based Talend products with Talend Studio |

| Email used as Git commit author when working with Talend Cloud | If you are working on a project managed by Talend Management Console, the email instead of the login name in Talend Management Console

is now used as the Git author and committer when committing your changes to Git in

Talend Studio.

|

All Talend Cloud products and Talend Data Fabric |

| New components to connect to Google Bigtable to store or retrieve data |

Talend Studio

now supports the following new components to connect to Google Bigtable to store

or retrieve data:

|

All Talend Platform and Data Fabric products |

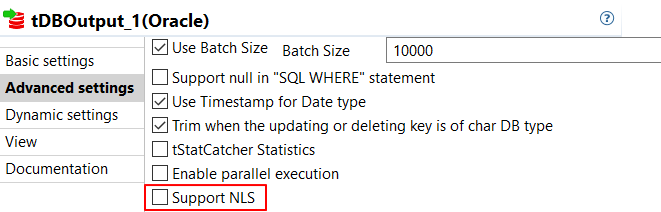

| New option in Oracle components and metadata wizard to add globalization support | Oracle components and the metadata wizard provide the Support

NLS option for enabling globalization support for Oracle 18 and

higher versions.

|

All subscription-based Talend products with Talend Studio |

| New component to write to Workday clients | This release provides the tWorkdayOutput component, which allows you to write data to a Workday client. |

All subscription-based Talend products with Talend Studio |

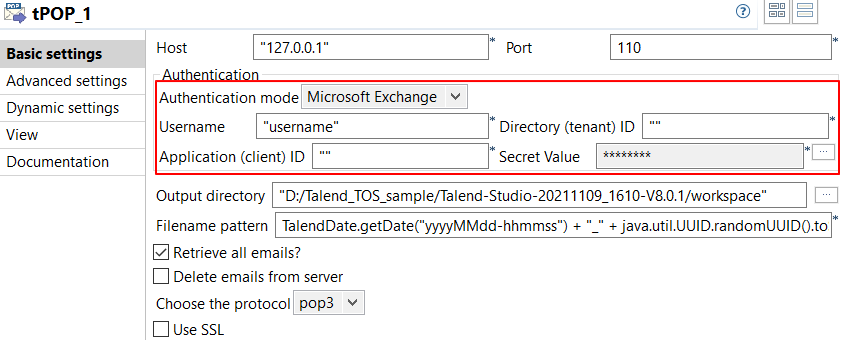

| Support for Microsoft Exchange authentication in tPOP | The tPOP component provides the Microsoft

Exchange authentication mode, which allows you to fetch messages in

the Microsoft Exchange authentication mode.

|

All subscription-based Talend products with Talend Studio |

| ID based pagination for the tDataStewardshipTaskInput component | Activate this option to improve performance when fetching and sorting

Talend Cloud Data Stewardship

tasks.

|

All subscription-based Talend products with Talend Studio |

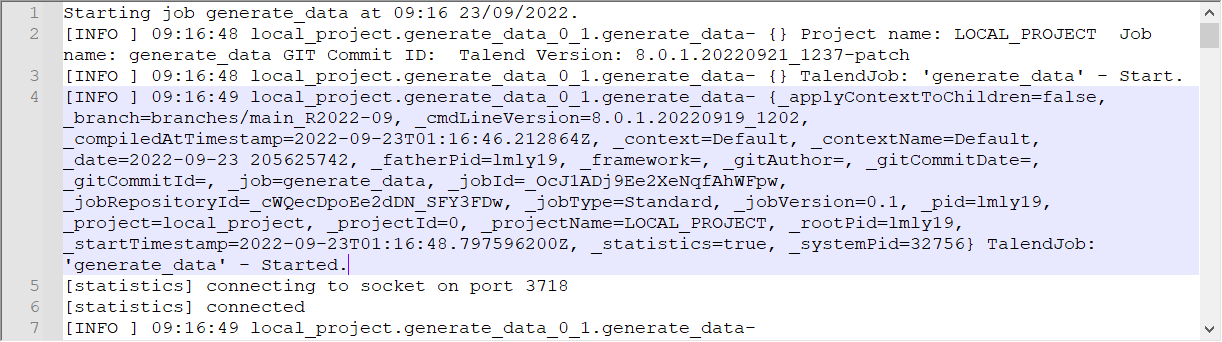

| Extra log attributes via MDC when using Log4j 2 | When using Apache Log4j 2 in your Jobs, the Log4j 2 MDC (Mapped Diagnostic

Context) is now populated with key-value pairs. These extra log attributes help

you easily identify the thread in which log data is generated when all log

messages from multiple threads are written into a single file. For more

information about Log4j 2 MDC, see Log4j 2

API - Thread Context. To include the extra log attributes when writing logs, you need to configure the Log4j 2 template in Talend Studio. For more information, see Activating and configuring Log4j.

|

All subscription-based Talend products with Talend Studio |
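To illustrate why a Mapped Diagnostic Context makes a shared log file easier to read, here is a minimal, stdlib-only Java sketch of the idea: each thread keeps its own key-value map, and every log line is prefixed with the calling thread's map. This is an assumption-based illustration, not the Log4j 2 API and not how Talend Studio populates the MDC; the class name `MdcSketch` and the key `jobName` are hypothetical, and the keys Talend actually sets are not listed in this release note.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Stdlib-only sketch of a mapped diagnostic context; this is NOT the Log4j 2 API.
class MdcSketch {
    // One key-value map per thread, similar in spirit to Log4j 2's ThreadContext map.
    private static final ThreadLocal<Map<String, String>> CONTEXT =
            ThreadLocal.withInitial(HashMap::new);

    // Shared sink standing in for a single log file written by every thread.
    static final List<String> LOG = Collections.synchronizedList(new ArrayList<>());

    static void put(String key, String value) {
        CONTEXT.get().put(key, value);
    }

    static void clear() {
        CONTEXT.get().clear();
    }

    // Prefix each message with the calling thread's context, much like %X in a pattern layout.
    static void log(String message) {
        Map<String, String> snapshot = new TreeMap<>(CONTEXT.get()); // sorted for stable output
        LOG.add(snapshot + " " + message);
    }

    // "jobName" is a hypothetical key chosen for this sketch.
    static void runJob(String jobName) {
        put("jobName", jobName);
        try {
            log("started");
            log("finished");
        } finally {
            clear(); // do not leak context into a reused (pooled) thread
        }
    }

    public static void main(String[] args) {
        Thread a = new Thread(() -> runJob("jobA"));
        Thread b = new Thread(() -> runJob("jobB"));
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return;
        }
        // Lines from both threads may interleave, but each one carries its own context.
        LOG.forEach(System.out::println);
    }
}
```

The order of the four lines depends on thread scheduling, but each line starts with its thread's context, for example `{jobName=jobA} started`. In Log4j 2 itself, the equivalent calls are `ThreadContext.put(key, value)` and `ThreadContext.clearMap()`, and a `PatternLayout` prints the map with the `%X` or `%X{key}` conversion pattern.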

Data Mapper: new features

|

Feature |

Description |

Available in |

|---|---|---|

|

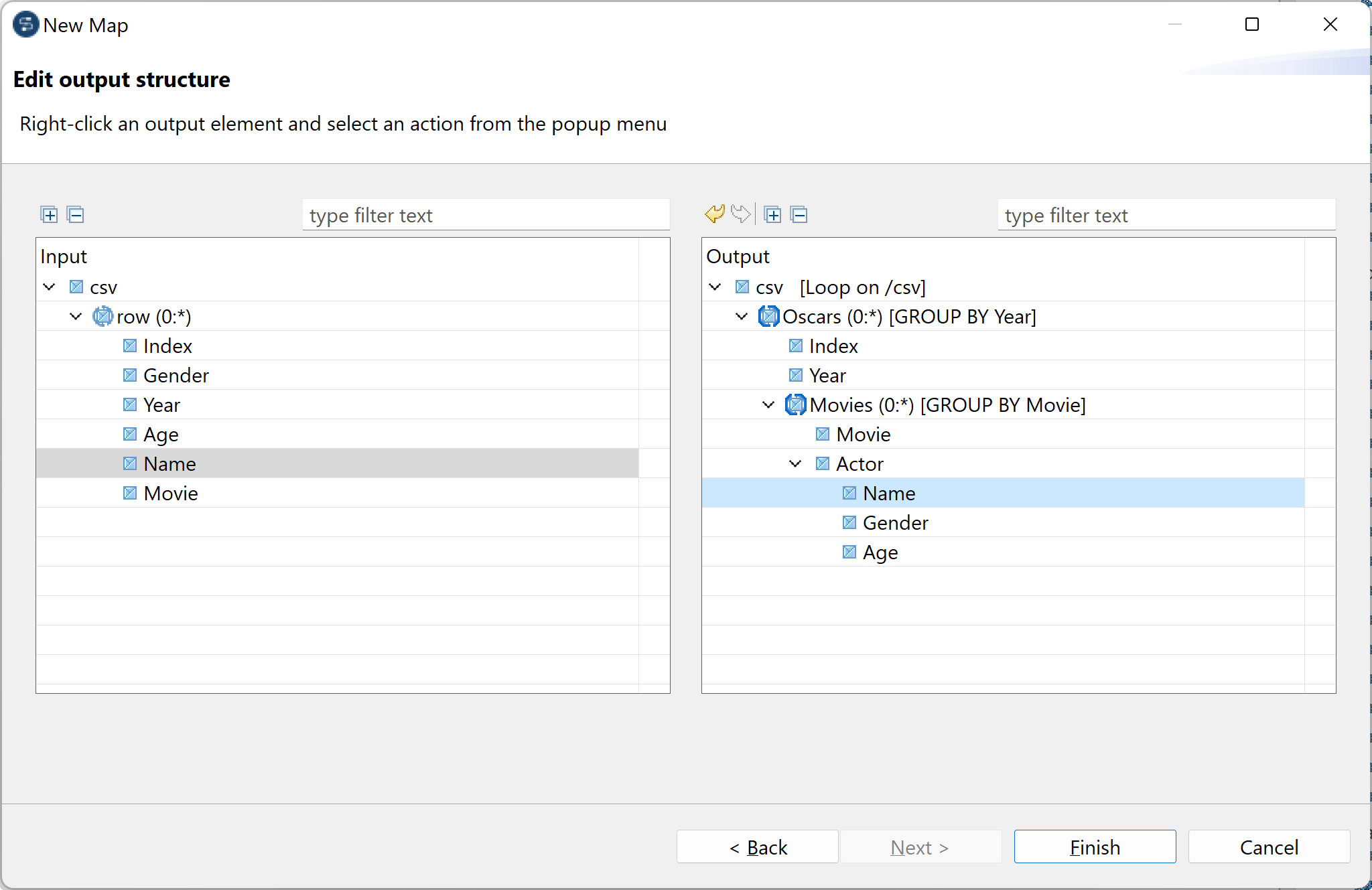

Flat to hierarchical map |

A new type of map allows you to create a hierarchical structure from a flat

structure and map them. You can edit the output structure to create arrays,

records and group your data based on a specific element. Once the output

structure is defined, a map is created and the input is automatically mapped to

the output.

|

All Talend Platform and Data Fabric products |

Data Quality: new features

| Feature | Description | Available in |
|---|---|---|
| Support for Spark 3.2 in local mode and on Databricks | You can now run DQ components using Apache Spark 3.2 in local mode and on Databricks, with a few exceptions: | All Talend Platform and Data Fabric products |

Continuous Integration: new features

| Feature | Description | Available in |
|---|---|---|
| Talend CI Builder upgraded to version 8.0.9 | Talend CI Builder is upgraded from version 8.0.8 to version 8.0.9. Use Talend CI Builder 8.0.9 in your CI commands or pipeline scripts from this monthly version onwards until a new version of Talend CI Builder is released. | All subscription-based Talend products with Talend Studio |
| Support for basic authentication for update repositories | If basic authentication is enabled in Talend Studio, you can now use the following parameters in your CI commands to safely access the Talend update repositories: For more information, see Basic authentication for update repositories in Talend Studio and CI builder-related Maven parameters. | All subscription-based Talend products with Talend Studio |
