Crawling for multiple datasets

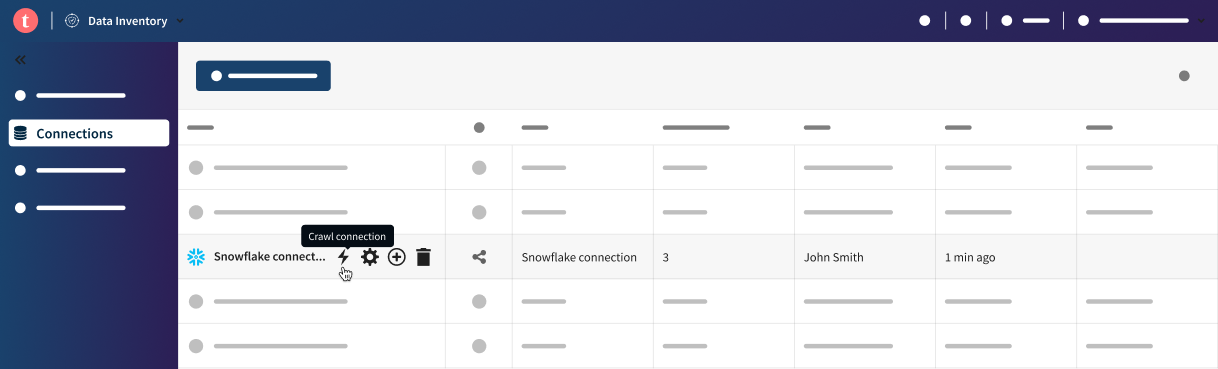

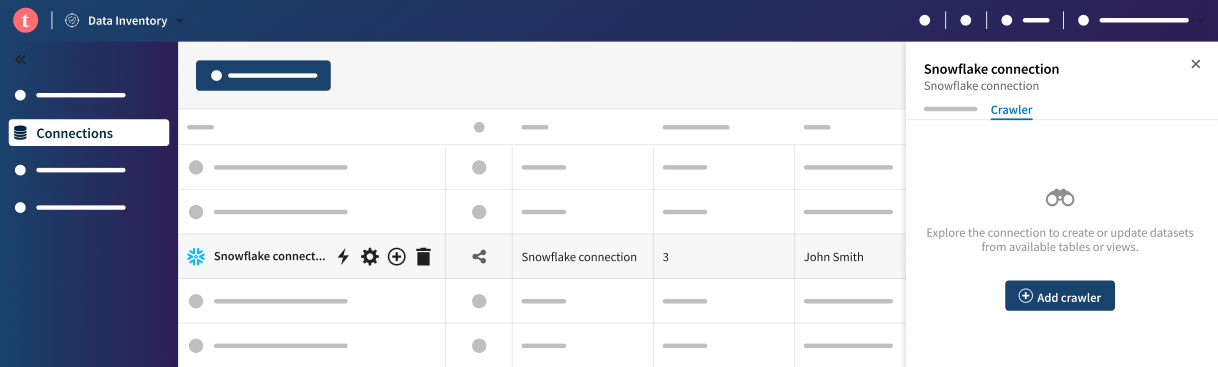

If you need to import numerous datasets from the same source, instead of manually creating them one by one in Talend Cloud Data Inventory, you can create a crawler to retrieve a full list of assets in a single operation.

Crawling a connection allows you to retrieve data at a large scale and enrich your inventory more efficiently. After selecting a connection, you can import all of its content, or only part of it by using a quick search and filter, and select which users will have access to the newly created datasets.

You can select the tables to retrieve in two ways:

- Dynamic selection: retrieves all the tables that match a specific filter, regardless of what your data source contains at a given time.

- Manual selection: retrieves only the tables you select manually from the current state of your data source.
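To make the difference between the two selection modes concrete, here is a minimal Python sketch. It is not Talend code: `DynamicSelection`, `ManualSelection`, and `resolve` are hypothetical names, and the filter is assumed to be a case-insensitive substring match, which may differ from the actual search and filter behavior in Talend Cloud Data Inventory.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class DynamicSelection:
    """Hypothetical model: stores a filter and re-applies it to the source each time."""
    pattern: str

    def resolve(self, current_tables: Sequence[str]) -> List[str]:
        # Assumption: case-insensitive substring match, not Talend's documented filter syntax.
        needle = self.pattern.lower()
        return [t for t in current_tables if needle in t.lower()]


@dataclass(frozen=True)
class ManualSelection:
    """Hypothetical model: stores the exact tables picked from the source at selection time."""
    tables: Tuple[str, ...]

    def resolve(self, current_tables: Sequence[str]) -> List[str]:
        # current_tables is ignored: tables added to the source later are never picked up.
        return list(self.tables)


# Usage: a table is added to the source after both selections were defined.
source_then = ["customers", "customer_orders", "products"]
source_now = source_then + ["customer_returns"]

dynamic = DynamicSelection("customer")
manual = ManualSelection(tuple(dynamic.resolve(source_then)))  # user picks from the filtered list

print(dynamic.resolve(source_now))  # ['customers', 'customer_orders', 'customer_returns']
print(manual.resolve(source_now))   # ['customers', 'customer_orders']
```

The dynamic selection picks up `customer_returns` because its filter is evaluated against the tables that exist now, whereas the manual selection keeps the list that was picked earlier.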

Crawling a connection for multiple datasets comes with the following prerequisites and limitations:

- You have been assigned the Dataset administrator or Dataset manager role in Talend Management Console, or at least the Crawling - Add permission.

- You are using Remote Engine version 2022-02 or later.

- You can only crawl data from a JDBC connection, and only one crawler can be created per connection at a time.

Procedure

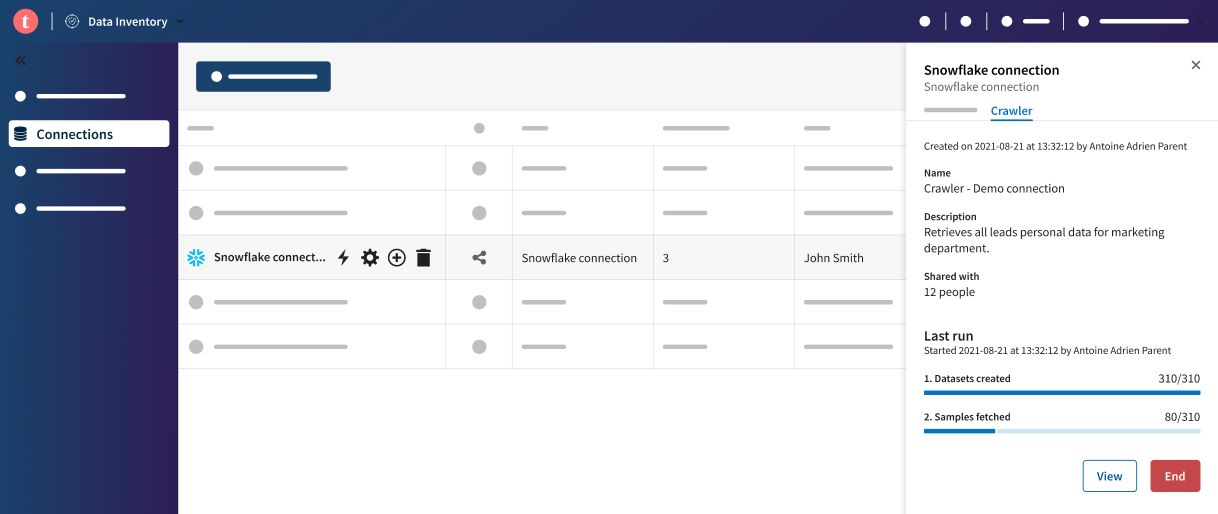

Results

You cannot edit a crawler's configuration while it is running. Once the crawler is stopped or finished, you can edit its table selection, name, and description, but not its sharing settings. To crawl the connection again with different sharing settings, delete the crawler and create a new one.
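The editing rules above can be summarized as a small status check. This is a minimal, hypothetical Python model: the `CrawlerStatus` enum, the setting names, and the `editable_fields` and `can_edit` helpers are illustrative only, not part of Talend Cloud Data Inventory, and the model only covers the three statuses mentioned on this page.

```python
from enum import Enum
from typing import FrozenSet


class CrawlerStatus(Enum):
    # Hypothetical status names; only running, stopped, and finished are described on this page.
    RUNNING = "running"
    STOPPED = "stopped"
    FINISHED = "finished"


# Settings that can change once the crawler is no longer running.
# Sharing settings are deliberately absent: they cannot be edited after creation.
_EDITABLE_WHEN_IDLE: FrozenSet[str] = frozenset({"table_selection", "name", "description"})


def editable_fields(status: CrawlerStatus) -> FrozenSet[str]:
    """Return the crawler settings that can be edited in the given status."""
    if status is CrawlerStatus.RUNNING:
        return frozenset()  # nothing can be edited while the crawler runs
    return _EDITABLE_WHEN_IDLE


def can_edit(status: CrawlerStatus, setting: str) -> bool:
    """True if the given setting can be edited in the given status."""
    return setting in editable_fields(status)


print(can_edit(CrawlerStatus.FINISHED, "name"))    # True
print(can_edit(CrawlerStatus.STOPPED, "sharing"))  # False
print(can_edit(CrawlerStatus.RUNNING, "name"))     # False
```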

You can use a crawler name as a facet in the dataset search to see all the datasets linked to that crawler.
